What Is An AI Server? Understanding Artificial Intelligence Servers

AI, or artificial intelligence, is changing the way organizations and businesses handle data by automating complex calculations, enabling new advanced applications, and driving computational demands like never before.

This is where AI server clusters stand out, crafted for HPC (High-Performance Computing), enormous amounts of data, and very demanding AI workloads. Some of these operations involve deep learning, image recognition, and natural language processing. From running large language models to perfecting generative AI, a server capable of handling these modern demands is no longer a luxury; it’s a must.

For organizations looking to effectively handle modern demands, dedicated AI servers offer a reliable solution with specialized hardware, high-speed networking, and ample RAM.

To cover modern requirements, here at ServerMania, we offer a range of options, including colocation for AI infrastructure, managed AI server solutions, and cloud-based AI servers, ensuring organizations can deploy, maintain, and scale AI tasks with maximum efficiency.

In this quick guide, we’ll walk you through everything you need to know before deploying your first AI server configuration, covering most of your burning questions.

Understanding AI Servers

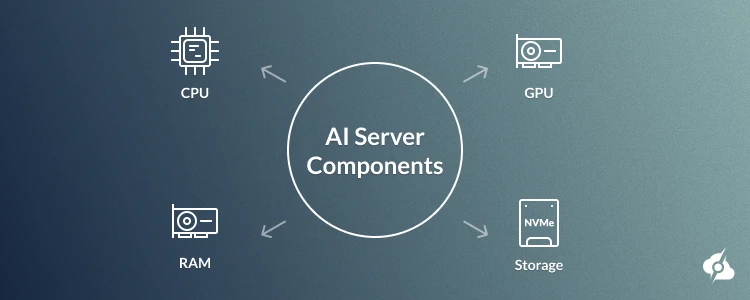

Similar to the regular server configuration, artificial intelligence servers also include a CPU (central processing unit), GPU (graphics processing unit), RAM (memory), and storage (SSD or NVMe).

What sets AI servers apart is their specialized hardware, tailored to modern workloads. We’re speaking of high-core-count CPUs, in many cases multiple GPUs with large memory, massive amounts of RAM, and, of course, data center facilities built to power and cool them.

This functionality combined is what enables AI servers to support a vast range of resource-intensive workloads, making them critical for most AI frameworks.

AI Server Components: What Makes a Server “AI-Ready”

To understand the essential factors that set AI servers apart from regular server configurations, we’re going to take a quick look at each key component:

CPU Throughput:

The most important piece in any server configuration, not only AI servers, is, of course, the powerful CPU, responsible for the calculations. And here, for AI workloads, not every CPU can get the job done, as you need a processor that can run 24/7, be power efficient, support various critical features such as ECC memory, and be powerful enough to handle the load.

Some of the CPUs capable of handling AI workloads are the latest Intel Xeon CPUs or AMD chips from the EPYC series. The CPU provides the general-purpose computing power needed to run large models, multiple AI tasks, neural networks, and large datasets.

In short, you need maximum efficiency from the AI server CPU.

High-Power GPUs:

The second place is surely secured by the GPU, or even multiple GPUs, when it comes to AI servers, thanks to what is often called “AI acceleration”. While the CPU handles general-purpose computation and coordination, GPUs speed up AI workloads like computer vision, generative AI, deep learning, and image recognition through massively parallel execution.

Some honorable mentions here would definitely be the NVIDIA L4 Tensor Core GPU and the NVIDIA H100, the latter of which pairs high-bandwidth memory with exceptional processing power. GPUs in this class can train large-scale AI models faster and improve AI server performance in various ways. When this is combined with a strong CPU and high-speed networking, an AI server can meet the latest demands of AI.

See Also: What is a GPU Dedicated Server?

Memory & Storage:

Ample RAM and fast storage are at the center of an AI server configuration, which can manage large datasets and data sources quickly and efficiently.

AI servers typically utilize NVMe SSDs, which are far faster than regular HDDs and provide high-throughput storage. This keeps data flowing to the CPU and GPUs for tasks like AI applications, data analytics, natural language processing, and many more.

However, in addition to fast storage, proper hardware and software integration is critical for achieving the maximum efficiency expected from an AI server. It’s also important to optimize power consumption as much as possible, since energy efficiency is another critical factor when dealing with high-performance AI workloads that utilize the hardware 24/7.

Cooling Systems:

Last but definitely not least is the cooling of hardware that handles intense computational demands, like training large models and running multiple AI tasks at the same time. Even though server processors are designed for this kind of utilization, air cooling may not always be enough, which is where liquid cooling comes in.

It’s critical to provide your AI server with the cooling it requires to enhance the AI server’s performance and prevent potential throttling when the thermal margin is exceeded. Some large-scale AI models and other AI training tasks could push your CPU to the brink of overheating, so deploying a cooling solution that can handle the heat is critical for any computationally heavy AI workload.

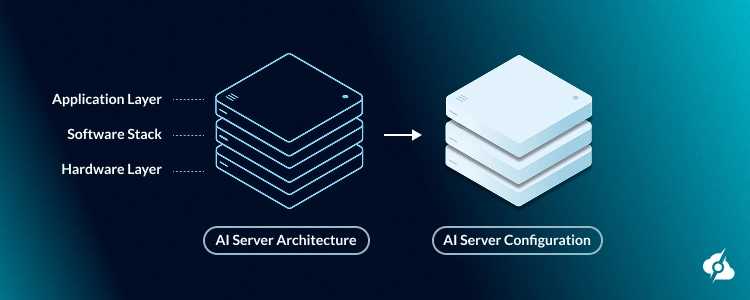

AI Server Architecture

The AI server architecture is designed to combine and balance several critical factors that, in most cases, include hardware requirements, software frameworks, and specific AI applications, so everything works together.

In contrast to traditional server builds, AI servers combine key features that enable large-scale deployments and meet the memory requirements of AI workloads, supporting accurate results and, of course, cost effectiveness.

To understand AI servers better, we need to go through the architectural layers:

Hardware Layer

The hardware is the foundation and the fundamental layer of any AI server, and often includes powerful CPUs, more than one GPU, RAM, and NVMe storage drives. These hardware components are meant to maximize performance, and when combined with management tools and ongoing maintenance, a single server can easily surpass one’s expectations.

With the right balance of compute and memory, organizations can achieve the best efficiency and meet the demands of AI without bottlenecks.

Software Stack

Once the foundation is in place, the software stack comes next, which typically means a specialized operating system, AI frameworks, and libraries.

There are many tools available, like TensorFlow and PyTorch, that leverage the specialized hardware to carry out tasks such as deep learning, natural language processing, and computer vision workloads. This layer helps ensure that both small and large AI models run effectively while keeping cost-effectiveness in mind.
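As a small illustration of how a framework in this layer discovers the hardware beneath it, here is a minimal sketch that asks PyTorch whether a CUDA-capable GPU is visible. The helper name `pick_device` is our own, and the sketch assumes PyTorch may or may not be installed, falling back to the CPU in either case.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; the sketch still runs without it
    except ImportError:
        return "cpu"
    # torch.cuda.is_available() reports whether a usable CUDA GPU is present
    return "cuda" if torch.cuda.is_available() else "cpu"


print(f"Running on: {pick_device()}")
```

In a real project, the returned string would typically be passed to the framework when placing models and tensors, so the same code can run on a GPU server or a plain CPU machine.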

Application Layer

At the very top is the application-level layer, where AI projects are actually being used as real-world tools, solutions, and problem-solving techniques.

This includes inference engines, generative AI, and AI applications such as image recognition, self-driving cars, and data analytics. By integrating all three layers into a unified AI server architecture, organizations can achieve accurate results, reduce power consumption, and ensure systems remain optimized for demanding workloads.

Advantages & Disadvantages of AI Servers

Just like any other tech, AI servers are extremely efficient in some situations, while they could be a complete overkill, overspending, or even a drawback in other cases. To better understand when AI servers are needed, we need to review their strengths as well as take a look at their disadvantages.

Advantages of AI Servers

AI servers were meant for running AI models, machine learning, and most importantly, processing data, whether it’s a cloud-based AI server or on-premises. Nowadays, many more AI tasks arise, and the raw processing power needed can’t really be extracted from traditional servers.

Here’s what AI servers are best at:

| Advantage | Description |
|---|---|
| High Performance | Delivers the processing performance needed for deep learning, generative AI, data, and large language models. |
| Scalability | Can scale across multiple servers for large-scale deployments. |
| Specialized Hardware | Uses GPUs, Intel Xeon CPUs, and high-bandwidth memory to meet hardware requirements. |
| Precise Results | Optimized for AI workloads to produce reliable, proper results. |
| Management Tools | Supports continuous maintenance and performance optimization through management tools. |
| Cost (at Scale) | Reduces costs in the long run for businesses with constant AI needs. |

Disadvantages of AI Servers

While powerful, AI servers aren’t always the best option for every project. Their hardware requirements, memory requirements, and energy needs can be high, making them less practical for smaller workloads or limited budgets, or even for some specific AI workloads.

| Disadvantage | Description |
|---|---|
| High Upfront Costs | Purchasing or leasing dedicated AI servers requires a significant investment. |
| Energy & Cooling | Power consumption and liquid cooling demands can raise operational expenses. |
| Complex Maintenance | Requires skilled teams for ongoing maintenance and fine-tuning. |
| Overkill for Small Tasks | For lighter AI applications or simple data analytics, performance may exceed requirements. |
| Limited Cost Effectiveness | It may not justify expenses for businesses that don’t need large-scale deployments. |

The key takeaway here is to carefully balance hardware and software to achieve the performance you’re looking for, whether your server is considered to be “AI” or not. In many real-world scenarios, the demand is not constant, and overpaying for a physical AI machine may not be the best move.

That’s why we encourage you to explore ServerMania cloud-based AI servers!
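The trade-off between a dedicated machine and on-demand capacity can be sketched as a simple break-even calculation. The function name and every figure below are hypothetical and chosen only for illustration; they are not real prices. The sketch also assumes, as a simplification, that the dedicated server’s running cost is paid only for hours of actual use.

```python
def break_even_hours(upfront_cost: float, dedicated_hourly: float, cloud_hourly: float) -> float:
    """Hours of use after which owning costs less than renting on demand.

    upfront_cost: one-time cost of the dedicated server
    dedicated_hourly: running cost per hour (power, cooling, maintenance)
    cloud_hourly: on-demand price per hour for comparable capacity
    """
    saving_per_hour = cloud_hourly - dedicated_hourly
    if saving_per_hour <= 0:
        # Renting is never more expensive per hour, so owning never pays off
        return float("inf")
    return upfront_cost / saving_per_hour


# Hypothetical figures, not real prices
hours = break_even_hours(upfront_cost=30_000, dedicated_hourly=0.50, cloud_hourly=3.00)
print(f"Break-even after {hours:,.0f} hours of use")  # Break-even after 12,000 hours of use
```

With these made-up numbers, the dedicated server only pays for itself after roughly 12,000 hours of use, which is why workloads with irregular demand often favor on-demand capacity.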

Types of AI Servers: Training, Inference, and Hybrid

It’s difficult to categorize all artificial intelligence servers under one label, “AI servers,” as they differ in various aspects depending on whether you’re training AI models, supporting AI inference, or doing both.

We’re going to break down AI servers in different categories:

Training Servers

Undoubtedly, the training servers are the machines that require a massive amount of processing power, and when you hear “AI server”, it’s probably a training server.

These are the servers that come with the highest-end hardware components, such as multiple graphics cards, AMD EPYC series CPUs, and top-tier on-premises support. Cloud solutions may not be the best choice if you find yourself dealing with massive, sustained workloads such as training large AI models.

Inference Servers

On the other hand, inference servers are the machines that are running the already trained models, and unlike the training servers, they rely mostly on networking rather than raw power.

The cloud-based AI server here is going to be the perfect choice, but even if on-premises, you don’t need a massive amount of computing power, especially when AI training is not involved. Many are also designed for edge deployment, meaning they can be placed closer to where data is generated, which reduces delay and improves responsiveness in time-sensitive applications.

Hybrid AI Servers

The hybrid servers are like the middle ground here, both for training and inference. These systems are highly flexible, supporting development, testing, and production on the same infrastructure. Businesses often choose a hybrid when they need versatility without investing in separate systems for each task.

Here at ServerMania, we offer tailored configurations that allow businesses to scale their resources as projects grow, combining cost efficiency with reliable performance.

AI Servers Use Cases:

AI servers can already be found fully deployed and functional in various industries:

Healthcare:

AI servers can be found in many applications, such as medical imaging, drug discovery, personalized medicine, and more. They analyze massive amounts of data with the precision needed to support accurate diagnoses, treatment plans, and reports.

Finance:

AI servers run fraud detection, algorithmic trading, and risk management applications in the financial sector, surfacing insights that support better decision-making. AI servers in the fintech industry also enable real-time analysis of huge data sets, helping financial institutions detect fraudulent activities and optimize trading strategies.

See Also: How to Optimize a Server for Big Data Analytics

Science:

AI servers process thousands of datasets from experiments, simulations, and observations, allowing scientists to analyze and interpret the results with extraordinary speed and precision. Researchers can use these AI capabilities in data analysis and modeling tasks to understand complicated biological systems, chemical reactions, and physical phenomena.

Entertainment:

AI servers are also important in content development and cloud gaming, where they process complex graphics and power AI-driven enhancements for users. AI servers in the entertainment industry also help in creating immersive content, like virtual reality experiences and generated animations.

AI Tasks Server Requirements:

As we’ve already mentioned, building an AI server is not only about performance, but also about blending software and hardware efficiently.

If you’re looking to deploy an AI server, whether it’s for heavy machine learning or you only want to support AI inference, here’s the minimum you’ll need:

| Component | Recommendation | Notes |
|---|---|---|
| CPU | 16+ cores (Intel Xeon / AMD EPYC) | Provides the parallel processing needed to handle complex AI tasks and coordinate GPU workloads. |
| GPU | NVIDIA RTX 4000 or better | Accelerates training and inference, especially for deep learning and computer vision tasks. |
| RAM | 64GB minimum, 256GB+ optimal | Ensures large datasets can be processed in memory without bottlenecks. |
| Storage | 2TB+ NVMe SSD | Delivers high-speed data access for training, inference, and analytics without delays. |
| Network | 10Gb minimum | Supports fast data transfer between nodes in multi-server setups or cloud environments. |
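To show how the minimums in the table above might be checked against a candidate configuration, here is a minimal sketch. The `ServerSpec` class, its field names, and the `missing_requirements` helper are our own illustrative choices. The GPU is reduced to a simple yes/no flag because comparing GPU models is beyond this sketch, and the table’s "10Gb" is read as 10 Gbps.

```python
from dataclasses import dataclass

# Minimums copied from the requirements table; the key names are illustrative
MINIMUMS = {"cpu_cores": 16, "ram_gb": 64, "nvme_tb": 2, "network_gbps": 10}


@dataclass
class ServerSpec:
    cpu_cores: int
    ram_gb: int
    nvme_tb: float
    network_gbps: float
    has_gpu: bool  # True if a suitable GPU is installed (simplified to a flag)


def missing_requirements(spec: ServerSpec) -> list[str]:
    """Return the names of components that fall below the table's minimums."""
    shortfalls = [
        name for name, minimum in MINIMUMS.items()
        if getattr(spec, name) < minimum
    ]
    if not spec.has_gpu:
        shortfalls.append("gpu")
    return shortfalls


# Hypothetical candidate: plenty of cores and storage, but short on RAM
candidate = ServerSpec(cpu_cores=32, ram_gb=48, nvme_tb=4, network_gbps=10, has_gpu=True)
print(missing_requirements(candidate))  # ['ram_gb']
```

An empty list means the configuration meets every minimum in the table; it says nothing about whether the server reaches the "optimal" 256GB+ tier.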

See Also: Explore AMD EPYC AI Servers!

Handle Your AI Workloads with ServerMania!

Here at ServerMania, with over two decades of experience in high-performance computing, we deliver powerful infrastructure for artificial intelligence, offering dedicated servers optimized for demanding workloads.

Our enterprise-grade hardware allows you to deploy server configurations for anything from training and inference to large-scale data projects and compute-intensive edge AI workflows.

Our AI-ready data centers are designed for reliability and performance, offering businesses all of the computing foundation they need to innovate without limits.

We provide a range of solutions to match different business needs, including:

- GPU servers for AI: Accelerate training and inference with high-performance GPUs.

- Database Servers for AI: Optimized for handling structured and unstructured datasets.

- Cloud AI Servers: Flexible, on-demand resources for scalable AI development.

- Enterprise AI solutions: Customized deployments for production-ready applications.

🗨️Ready to get started? Book a free consultation or talk to an AI expert today to discover the right AI server solution for your business.

AI Servers FAQ:

What makes a server an AI server?

An AI server is built with specialized hardware and software designed to handle the high computational demands of training and running AI models.

Do I need a GPU for an AI server?

Yes, while CPUs handle coordination, GPUs are essential for accelerating deep learning and large-scale AI workloads with specific requirements.

How much does an AI server cost?

Costs vary widely, from a few thousand dollars for entry-level setups to hundreds of thousands for enterprise-grade configurations. Many factors matter here, such as hardware, software, fine-tuning, data center tier, and more.

Can I convert my existing server to an AI server?

In some cases, yes—adding GPUs, more RAM, and optimized storage can adapt a traditional server for AI workloads, but performance may be limited.

What’s the difference between training and inference servers?

Training servers focus on building and fine-tuning models, while inference servers are optimized for running those models in real-time.

How much RAM do AI servers need?

A minimum of 64GB is recommended, but 256GB or more is ideal for handling large datasets and complex AI projects that demand more memory.
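As a rough way to sanity-check these figures against your own data, the sketch below estimates the in-memory size of a dense numeric dataset and compares it with the available RAM. The function names, the sample dataset, and the 50% headroom reserved for the operating system and framework overhead are all assumptions made for illustration.

```python
def dataset_size_gb(num_samples: int, values_per_sample: int, bytes_per_value: int = 4) -> float:
    """Approximate in-memory size of a dense numeric dataset, in GB (10^9 bytes).

    bytes_per_value defaults to 4, the size of a 32-bit float.
    """
    return num_samples * values_per_sample * bytes_per_value / 1e9


def fits_in_ram(size_gb: float, ram_gb: float, headroom: float = 0.5) -> bool:
    """True if the dataset fits in the share of RAM left after reserving headroom."""
    return size_gb <= ram_gb * (1 - headroom)


# Hypothetical dataset: 50 million samples with 512 float32 values each
size = dataset_size_gb(50_000_000, 512)
print(f"{size:.1f} GB")        # 102.4 GB
print(fits_in_ram(size, 64))   # False
print(fits_in_ram(size, 256))  # True
```

For this made-up dataset, 64GB falls short while 256GB leaves comfortable room, which matches the minimum and ideal figures above.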
