CPU vs GPU: Comparing Key Differences Between Processing Units

Understanding what sets Central Processing Units (CPUs) and Graphics Processing Units (GPUs) apart in modern computing systems gives AI/ML engineers, game developers, and enthusiasts a real advantage.

These processing units power high-performance computing workloads ranging from machine learning to graphics rendering.

At ServerMania, we’ve helped hundreds of businesses, research teams, and professionals design their own infrastructure that balances high throughput, low latency, and power consumption for everything from parallel computing clusters to complex simulations.

Whether you’re evaluating GPU server hosting solutions, exploring dedicated servers, or building scalable cloud infrastructure for neural networks and data processing, our expertise ensures your hardware choice delivers the performance your applications demand.

What is a CPU? (Central Processing Unit)

A central processing unit (CPU) is the core component of every computer system, responsible for executing program instructions largely in sequence. Its main job is to handle the general-purpose computing tasks that every system depends on.

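The CPU fetches and executes instructions, manages multitasking, and performs arithmetic and logic operations, while its control unit directs execution and its memory management unit translates virtual addresses to physical memory. How much work it can do in parallel depends on its number of cores and threads.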

CPU history dates back to the early days of computing. In the mid-20th century, CPUs filled entire rooms; as manufacturing technology advanced, they shrank into chips with billions of transistors that fit in the palm of your hand.

In a nutshell, high clock speeds combined with multiple cores let modern CPUs handle everything from everyday tasks like web browsing to the complex calculations businesses rely on.

What is a GPU? (Graphics Processing Unit)

In contrast to the CPU, a graphics processing unit (GPU) is a specialized processor built for parallel processing, which deep learning, graphics rendering, and large-scale data processing all depend on. These highly parallel workloads run best on GPUs because a CPU's mostly sequential execution on a few cores cannot keep pace.

GPU cores are optimized for parallel computation, which is why GPUs excel at running many operations simultaneously. That makes them a natural fit for applications that demand massive parallelism and high data throughput.

GPUs rose to popularity with the 3D video game boom of the 1990s, as hardware makers and game developers pushed their parallel capabilities to improve graphics rendering. Since then, GPUs have grown far beyond gaming and are now critical for simulations, machine learning, and AI workloads.

CPU vs GPU – Key Differences

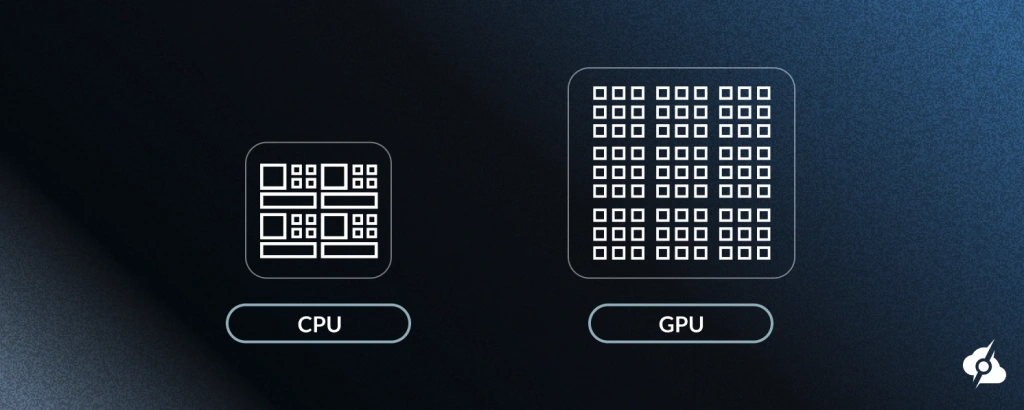

- CPUs (Central Processing Units) are general-purpose processors optimized for sequential task execution and complex decision-making.

- GPUs (Graphics Processing Units) are specialized parallel processors designed for simultaneous computation of thousands of simple operations.

CPU Architecture | Deep Dive

The CPU, or Central Processing Unit, is the control center of your computer system: it coordinates the other components and keeps everything running with low latency.

Cores, Threads, and Cache

At the heart of every CPU are its cores. Each core executes its instruction stream largely in sequence, while multiple cores let separate applications and threads run in parallel. Simultaneous Multithreading (SMT), which Intel markets as Hyper-Threading, lets a single core run two (or more) hardware threads at once, so it can keep working on instructions when one thread stalls.
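To make the core/thread idea concrete, here is a minimal Python sketch of how a program might split one job into per-core chunks. It assumes `os.cpu_count()` as the default worker count, which reports logical CPUs (SMT threads included); the helper names `split_into_chunks` and `parallel_sum` are hypothetical. In standard CPython the GIL keeps pure-Python threads from running this CPU-bound sum truly in parallel, so treat it as a layout sketch; a `ProcessPoolExecutor` or native code would be needed for real multicore speedups.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, parts):
    """Split data into `parts` contiguous chunks whose sizes differ by at most one."""
    size, remainder = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum(data, workers=None):
    """Sum each chunk in its own worker, then combine the partial sums."""
    # os.cpu_count() counts logical CPUs, i.e. cores x SMT threads per core.
    workers = workers or os.cpu_count() or 1
    chunks = split_into_chunks(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum, chunks))


print(parallel_sum(list(range(1_000_000))))
```

The same split-then-combine shape applies whatever executes the chunks: OS threads, processes, or native thread pools.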

Alongside the cores sits the cache, a small amount of ultra-fast memory on the CPU die that keeps recently and frequently used data close to the cores, so repeated accesses avoid slow trips to main memory (RAM).

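Caches are organized in a hierarchy:

- L1 cache is the fastest but smallest, and is private to each core

- L2 balances size and speed, and is usually private to each core

- L3 cache is the largest and slowest level, and is typically shared by all CPU cores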
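A common way to reason about this hierarchy is average memory access time (AMAT): each level's hit time plus its miss rate times the cost of going one level further out. The Python sketch below applies that formula level by level; the latencies and miss rates in the example are made-up, illustrative numbers rather than figures for any real CPU.

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    levels: (hit_time_cycles, miss_rate) pairs ordered from L1 outward.
    memory_latency: cycles to reach main memory after missing every cache.
    """
    penalty = memory_latency
    # Work inward from the last-level cache: each level's miss penalty
    # is the average access time of the level behind it.
    for hit_time, miss_rate in reversed(levels):
        penalty = hit_time + miss_rate * penalty
    return penalty


# Hypothetical values: L1, L2, L3 hit times and local miss rates.
levels = [(4, 0.10), (12, 0.30), (40, 0.50)]
print(round(amat(levels, memory_latency=200), 2))  # 9.4
```

Even with a 200-cycle trip to RAM in this example, the average access costs under 10 cycles because most requests are served by L1.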

Clock Speed, Turbo & Heat

Another fundamental aspect of a CPU is its clock speed, the number of cycles per second the chip runs, which largely determines how fast it can execute instructions. Modern desktop CPUs range from a base clock of around 2.5 GHz on the Intel Core i5-12400 up to a blistering 5.8 GHz boost clock on the Intel Core i9-13900K.
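Clock speed is only part of the story: a program's run time is roughly its instruction count divided by (instructions per cycle × clock frequency). This small Python sketch, using hypothetical instruction counts and IPC values, shows why a lower-clocked core with higher IPC can keep up with a faster-clocked one.

```python
def execution_time_s(instructions, ipc, clock_hz):
    """Estimated run time: instructions / (instructions per cycle * cycles per second)."""
    return instructions / (ipc * clock_hz)


work = 10e9  # hypothetical: 10 billion instructions
# Same IPC, different clocks: halving the clock doubles the time.
print(execution_time_s(work, ipc=2, clock_hz=5.0e9))  # 1.0
print(execution_time_s(work, ipc=2, clock_hz=2.5e9))  # 2.0
# A higher-IPC core at the lower clock matches the first case.
print(execution_time_s(work, ipc=4, clock_hz=2.5e9))  # 1.0
```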

Turbo boost technologies, such as Intel Turbo Boost and AMD Precision Boost, raise the clock speed dynamically when a workload needs it, but only while there is enough thermal and power headroom. If the processor is already too hot or at its power limit, it won't boost, and it may even lower its clock speed to protect itself, which is known as thermal throttling.

See Also: GPU Optimal Temperature Range

CPU SIMD Instruction Sets

SIMD (Single Instruction, Multiple Data) accelerates data-parallel calculations, such as the vector and matrix math common in multimedia, scientific computing, and AI/ML. It lets a CPU apply one instruction to multiple data elements simultaneously, and there are a few main x86 SIMD extensions:

- SSE: SSE registers are 128 bits wide, enough for four 32-bit floating-point numbers at a time, which suits basic vectorized workloads.

- AVX: AVX widens vectors to 256 bits (eight 32-bit floats), doubling the data processed per instruction, which benefits graphics, media, and scientific code.

- AVX-512: AVX-512 widens vectors to 512 bits (sixteen 32-bit floats), further boosting throughput for HPC and deep learning workloads.
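The register widths above translate directly into how many elements one instruction touches. This plain-Python emulation (not real vector code) assumes 32-bit floats and counts one "instruction" per register-width chunk; the helper names are hypothetical. Real programs rely on compiler auto-vectorization or intrinsics, and handle a leftover tail with masking or a scalar loop.

```python
def lanes(register_bits, element_bits=32):
    """How many elements of `element_bits` fit in one SIMD register."""
    return register_bits // element_bits


def simd_add(a, b, register_bits):
    """Emulate element-wise addition one register-width chunk at a time.

    Returns the result and the number of vector 'instructions' a real
    SIMD loop would issue (one per chunk, including a partial tail chunk).
    """
    width = lanes(register_bits)
    result, instructions = [], 0
    for start in range(0, len(a), width):
        chunk_a = a[start:start + width]
        chunk_b = b[start:start + width]
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1
    return result, instructions


a = [float(i) for i in range(16)]
b = [1.0] * 16
for name, bits in [("SSE", 128), ("AVX", 256), ("AVX-512", 512)]:
    _, count = simd_add(a, b, bits)
    print(f"{name}: {lanes(bits)} floats per register, {count} add instructions")
```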

Single-Core vs. Multicore

Multicore CPUs generally outperform single-core designs on workloads that can be split into parallel tasks. However, single-core performance is still critical for sequential workloads that cannot be parallelized and depend on low latency.

In turn, multicore parallelism benefits most modern workloads, from multitasking desktops to busy servers, which makes multicore CPUs the default choice for almost everything today.
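Amdahl's law captures why single-core speed still matters: if only a fraction p of a program can run in parallel, the ideal speedup on n cores is 1 / ((1 − p) + p / n), so the serial part caps the gain no matter how many cores you add. A minimal Python sketch, with an illustrative 95% parallel fraction:

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup on `cores` cores when `parallel_fraction` of the work parallelizes."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


for n in (1, 4, 16, 64):
    print(n, round(amdahl_speedup(0.95, n), 2))
# As cores grow without bound, the speedup approaches 1 / (1 - p) = 20x for p = 0.95.
```

Going from 16 to 64 cores here gives far less than a 4x gain, because the 5% serial portion increasingly dominates the run time.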

Quick Note: Some CPUs offer high-performance integrated graphics within the same chip!

GPU Architecture | Deep Dive

GPUs are designed to run massively parallel workloads, unlike CPUs, which are optimized to execute instructions sequentially with low latency.

Multiprocessors and CUDA Cores

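GPUs are built from streaming multiprocessors (SMs, NVIDIA's term; AMD calls them compute units), each packed with cores optimized for parallel workloads. Threads are scheduled in groups called warps (NVIDIA, 32 threads) or wavefronts (AMD), and the threads in a group execute the same instruction together, an execution model known as SIMT (Single Instruction, Multiple Threads).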

Each SM also contains on-chip shared memory, which threads in the same block use to exchange data quickly, and warp schedulers that choose which warp issues instructions each cycle, hiding memory latency by switching between warps. This setup is why GPUs work best in tasks such as graphics rendering and deep learning.
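The warp model can be sketched with simple index arithmetic. Assuming NVIDIA's 32-thread warp size (AMD wavefronts are 64 threads on GCN/CDNA and 32 or 64 on RDNA), a thread's warp and lane come from dividing its ID by the warp size, and a block that isn't a multiple of 32 leaves idle lanes in its last warp. The function names are illustrative, not part of any GPU API.

```python
WARP_SIZE = 32  # NVIDIA warp size; AMD wavefront size differs by architecture


def warp_and_lane(thread_id, warp_size=WARP_SIZE):
    """Return (warp index, lane within the warp) for a thread in a block."""
    return divmod(thread_id, warp_size)


def warps_needed(threads_per_block, warp_size=WARP_SIZE):
    """Warps the scheduler must issue for a block (ceiling division)."""
    return -(-threads_per_block // warp_size)


def idle_lanes(threads_per_block, warp_size=WARP_SIZE):
    """Lanes left unused in the last, partially filled warp."""
    return warps_needed(threads_per_block, warp_size) * warp_size - threads_per_block


print(warp_and_lane(37))  # thread 37 -> warp 1, lane 5
print(warps_needed(256), idle_lanes(256))
print(warps_needed(100), idle_lanes(100))
```

A 100-thread block therefore occupies four warps with 28 idle lanes, which is why block sizes are usually chosen as multiples of the warp size.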

See Also: AMD vs NVIDIA GPU Comparison

Memory (GDDR vs. HBM)

GPUs use two main memory types: GDDR and HBM.

GDDR offers excellent speed and capacity for workloads such as gaming and video work, while HBM stacks memory dies right next to the GPU to deliver much higher bandwidth at lower power consumption, making it an excellent pick for data processing and machine learning applications. Both serve as the GPU's dedicated memory (VRAM), giving high-throughput workloads far more bandwidth than system RAM and reducing memory bottlenecks.
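Peak memory bandwidth follows from bus width and per-pin data rate: bandwidth in GB/s = bus width in bits × data rate in Gbit/s per pin ÷ 8. GDDR reaches high bandwidth with fast pins on a relatively narrow bus, while HBM uses a very wide 1024-bit interface per stack at lower per-pin speeds. The configurations below are illustrative sample inputs (the first matches a 384-bit, 21 Gbps GDDR6X card such as the GeForce RTX 4090; the HBM rate is an example HBM2e figure), not a spec sheet.

```python
def peak_bandwidth_gbs(bus_width_bits, data_rate_gbps_per_pin):
    """Theoretical peak bandwidth in GB/s (8 bits per byte)."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


# GDDR: narrow bus, fast pins.
print(peak_bandwidth_gbs(384, 21.0))  # 1008.0
# HBM: one 1024-bit stack at a lower per-pin rate (illustrative HBM2e figure).
one_stack = peak_bandwidth_gbs(1024, 3.2)
print(round(one_stack, 1), round(5 * one_stack, 1))  # per stack, five stacks
```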

Tensor Cores and AI Acceleration

Tensor cores are specialized units inside modern GPUs, first introduced by NVIDIA with its Volta architecture, that accelerate artificial intelligence and neural network workloads. They perform matrix multiply-accumulate operations, typically in reduced precision such as FP16, much faster than general-purpose cores, which speeds up both training and inference of deep learning models. That makes them a key building block for running AI at scale in data centers and beyond.
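At its core, a tensor core operation is a fused matrix multiply-accumulate on small tiles, D = A × B + C. The pure-Python sketch below computes the same result by walking the matrices tile by tile, the way GPU matrix-multiply kernels break large products into tensor-core-sized pieces. The 4×4 tile size and the function name are illustrative assumptions; real hardware executes each tile product in dedicated units, usually in reduced precision.

```python
def tiled_mma(a, b, c, tile=4):
    """Return D = A @ B + C, computed tile by tile (lists of lists)."""
    n, k, m = len(a), len(b), len(b[0])
    d = [row[:] for row in c]  # start from the accumulator C
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # One tile step: D tile += A tile @ B tile
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = d[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        d[i][j] = acc
    return d


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]
print(tiled_mma(a, b, c))  # [[20, 23], [44, 51]]
```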

See Also: How to Set Up and Optimize GPU Servers for AI Integration

CPU vs GPU: Performance and Architecture Comparison

If you’re deciding between a CPU and a GPU for your next project, start by comparing their architecture, speed, power draw, and more.

The table below is a technical comparison of the most crucial factors, depending on whether you need a chip for parallelism or for general computing.

| Feature | CPU | GPU |
|---|---|---|
| Architecture | Complex cores, sequential | Simple cores, parallel (SIMT) |
| Core Count | 2–128+ cores | 1,000–16,000+ cores |
| Clock Speed | 2.0–6.0 GHz | 1.0–2.5 GHz |
| Memory Bandwidth | 50–200 GB/s | 200–3,000+ GB/s |
| Power Draw | 35–280 W | 75–700 W |
| L1 Cache Size | 32–128 KB per core | Up to 256 KB per SM (combined L1/shared memory) |
| L2 Cache Size | 0.5–3 MB per core | Tens of MB (e.g., 50 MB on NVIDIA H100) |
| Threads | 2–256 threads (with SMT) | Thousands of threads |
| Instruction Type | Complex, out-of-order | Simple, in-order SIMT |
| Typical IPC | 1–4 instructions per cycle | N/A (parallel throughput focus) |
| Programming | C, C++, Python, Rust | CUDA, OpenCL, Vulkan |
| Cost per FLOP | Higher | Lower |
| Best For | General computing, low latency, sequential workloads | Parallel workloads, graphics, and AI |
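The clock-speed and core-count rows explain the cost-per-FLOP row. Theoretical peak throughput is roughly cores × clock × floating-point operations per core per cycle, so thousands of slower GPU cores outrun a few dozen fast CPU cores on parallel math. The figures below are hypothetical round numbers chosen to illustrate the formula, not specs of real products; the CPU value assumes two 256-bit FMA units per core (2 × 8 FP32 lanes × 2 operations = 32 FLOPs per cycle), and the GPU value assumes one FP32 fused multiply-add (2 FLOPs) per core per cycle.

```python
def peak_flops(cores, clock_hz, flops_per_core_per_cycle):
    """Theoretical peak floating-point operations per second."""
    return cores * clock_hz * flops_per_core_per_cycle


# Hypothetical 16-core CPU at 4.0 GHz with two 256-bit FMA units per core.
cpu = peak_flops(16, 4.0e9, 32)
# Hypothetical GPU with 10,000 cores at 2.0 GHz, one FP32 FMA per core per cycle.
gpu = peak_flops(10_000, 2.0e9, 2)
print(f"CPU: {cpu / 1e12:.2f} TFLOPS, GPU: {gpu / 1e12:.1f} TFLOPS")
```

Sustained throughput on real workloads is lower than this peak and depends on memory bandwidth and on how well the work parallelizes.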

See Also: What is the Best GPU Server for AI and Machine Learning?

CPU vs GPU | Bottleneck Analysis

It’s just as important to understand where CPUs and GPUs fall short. The table below covers the most common bottlenecks and how they limit performance.

| Bottleneck | Description | Impact |
|---|---|---|
| CPU in Parallel Workloads | Relatively few CPU cores and limited parallel throughput | Slower performance in machine learning, graphics rendering, and other parallel workloads |
| GPU in Sequential Processing | Optimized for throughput, not low-latency sequential execution | Inefficient for general computing, operating-system tasks, and branch-heavy code with complex logic |
| PCIe Bandwidth Limitations | Data transfers between the CPU and a discrete GPU are limited by the number of PCIe lanes and the PCIe generation | Lower effective throughput and added latency, which hurts real-time processing |
| Memory Capacity Constraints | CPUs rely on caches and system RAM; GPUs rely on a fixed amount of on-board GDDR or HBM memory | Limits the size of deep learning models, complex simulations, and other memory-heavy workloads |
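A quick back-of-the-envelope calculation shows why the PCIe row matters. Transfer time is data size divided by link bandwidth; the figures below assume roughly 32 GB/s for a PCIe 4.0 x16 link (its approximate theoretical peak per direction) and an illustrative 1,000 GB/s of on-board GPU memory bandwidth. Real transfers achieve only part of the theoretical PCIe peak.

```python
GB = 1e9  # decimal gigabytes, as used in bandwidth specs


def transfer_seconds(num_bytes, bandwidth_bytes_per_s):
    """Idealized time to move num_bytes at a sustained bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


dataset = 8 * GB
pcie4_x16 = 32 * GB      # approximate PCIe 4.0 x16 peak, one direction
gpu_memory = 1000 * GB   # illustrative on-board GDDR/HBM bandwidth

print(f"Over PCIe:       {transfer_seconds(dataset, pcie4_x16) * 1000:.0f} ms")
print(f"From GPU memory: {transfer_seconds(dataset, gpu_memory) * 1000:.0f} ms")
```

Keeping data resident in GPU memory, and overlapping transfers with computation, is the usual way to hide this gap.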

See Also: How to Build a GPU Cluster for Deep Learning

AI and Machine Learning: CPU vs GPU

Training Neural Networks

Training deep neural networks involves intensive computations, particularly matrix operations, which GPUs handle more efficiently than CPUs.

For instance, a Microsoft Azure demonstration using TensorFlow showed that training a model took 4 minutes on a GPU versus 17 minutes on a CPU, a roughly 4x speedup that reflects the GPU’s parallel processing design.

Moreover, a comparative analysis of CPU and GPU profiling for machine learning models indicated that GPUs offer significantly lower running times and better memory allocation during training, making them more suitable for large-scale AI tasks.

Inference Performance

For inference tasks, GPUs again outperform CPUs, especially with large models and high-volume workloads that process many inputs in parallel.

A case study on deploying machine learning models demonstrated that GPU clusters consistently delivered better throughput than CPU clusters, even when the cost was comparable, underscoring the GPU’s efficiency in real-time artificial intelligence applications.

Additionally, a benchmark comparing machine learning inference performance across different batch sizes revealed that GPUs significantly reduced processing time, particularly as batch sizes increased, making them ideal for applications requiring rapid predictions.
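One simple way to see why larger batches help is to model each inference call as a fixed overhead (kernel launches, data transfer, framework bookkeeping) plus a per-item cost. Throughput is then batch size divided by batch latency, and the fixed cost gets amortized as the batch grows. The 5 ms overhead and 0.1 ms per-item cost below are hypothetical, and this linear model ignores the point where a real GPU saturates its compute or memory.

```python
def batch_latency_s(batch_size, fixed_overhead_s=0.005, per_item_s=0.0001):
    """Hypothetical linear latency model for one batched inference call."""
    return fixed_overhead_s + per_item_s * batch_size


def throughput(batch_size, **model):
    """Predictions per second for a given batch size."""
    return batch_size / batch_latency_s(batch_size, **model)


for batch in (1, 8, 64, 256):
    print(f"batch={batch:>3}  latency={batch_latency_s(batch) * 1000:5.1f} ms  "
          f"throughput={throughput(batch):7.0f}/s")
```

The trade-off is visible in the latency column: each individual batch takes longer as it grows, so latency-sensitive services cap batch size even though throughput keeps rising.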

When to Choose a CPU or a GPU

Before deciding between a CPU and a GPU, it helps to know the most popular real-world use cases for each architecture. Both have distinct strengths, so understanding their core functionality and what each does best is critical.

AI and Machine Learning

GPUs excel at machine learning thanks to thousands of parallel cores, specialized hardware such as tensor cores, and dedicated high-bandwidth memory built for parallel throughput. CPUs typically handle data preparation and orchestration, and rely on GPUs for the heavy parallel math involved in machine learning.
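The CPU-prepares, GPU-computes split is usually built as a producer/consumer pipeline, so the CPU can prepare the next batch while the accelerator works on the current one. In this Python sketch `preprocess` and `accelerator_step` are hypothetical stand-ins (a real system would call a framework such as PyTorch or TensorFlow in the second one), and a bounded `queue.Queue` keeps the CPU from running too far ahead.

```python
import queue
import threading


def preprocess(raw_batch):
    """CPU-side stand-in: e.g. decode and normalize inputs."""
    return [x * 2 for x in raw_batch]


def accelerator_step(batch):
    """Stand-in for the GPU's heavy parallel work on one batch."""
    return sum(batch)


def run_pipeline(raw_batches, prefetch=2):
    ready = queue.Queue(maxsize=prefetch)  # bounded: at most `prefetch` batches waiting
    done = object()  # sentinel marking the end of the stream

    def producer():
        for raw in raw_batches:
            ready.put(preprocess(raw))
        ready.put(done)

    worker = threading.Thread(target=producer)
    worker.start()
    results = []
    while (batch := ready.get()) is not done:
        results.append(accelerator_step(batch))
    worker.join()
    return results


print(run_pipeline([[1, 2], [3, 4], [5, 6]]))
```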

General Purpose Computing

For everyday general-purpose computing tasks like web browsing, office apps, or running operating systems, CPUs are preferred due to their flexibility, optimized sequential capability, and ability to handle diverse instructions.

Some modern CPUs include an integrated GPU on the same chip, providing basic graphics rendering without needing separate hardware.

Video Editing & Rendering

Video editors and 3D artists benefit from GPUs’ high parallelism and optimized memory, speeding up graphics rendering, video editing, and real-time previews.

CPUs still manage timeline edits and effects that require sequential processing and complex decision-making, showing how both CPUs and GPUs often work in tandem.

Cryptocurrency Mining

Mining cryptocurrencies demands specialized hardware with high efficiency and throughput. GPUs, with their thousands of cores and dedicated memory, dominated mining for coins like Ethereum until Ethereum’s 2022 switch to proof-of-stake ended mining on that network.

CPUs can participate but are much less efficient because of their fewer cores and lower parallel throughput, which is why GPUs remain the preferred choice for large-scale mining rigs and for other compute-heavy workloads such as financial simulations.
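Mining parallelizes so well because proof-of-work is a brute-force search in which every candidate nonce can be tested independently, so thousands of GPU cores can each try different nonces at once. The Python sketch below is a toy SHA-256 puzzle for illustration only, not the algorithm of any real coin (Bitcoin uses double SHA-256 and Ethereum used the memory-hard Ethash); the hypothetical `start`/`stop` range is where work would be split across cores.

```python
import hashlib


def meets_target(data, nonce, difficulty_bits):
    """True if SHA-256(data || nonce) has at least `difficulty_bits` leading zero bits."""
    digest = hashlib.sha256(data + nonce.to_bytes(8, "little")).digest()
    return int.from_bytes(digest, "big") < (1 << (256 - difficulty_bits))


def mine(data, difficulty_bits, start=0, stop=None):
    """Scan nonces in [start, stop); each worker could take its own range."""
    nonce = start
    while stop is None or nonce < stop:
        if meets_target(data, nonce, difficulty_bits):
            return nonce
        nonce += 1
    return None


nonce = mine(b"example block", difficulty_bits=16)
print(nonce, hashlib.sha256(b"example block" + nonce.to_bytes(8, "little")).hexdigest())
```

Each extra difficulty bit doubles the expected number of attempts, which is why throughput, the number of hashes tried per second, is what mining hardware competes on.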

See Also: Best GPUs for Mining 2025

Deploy Optimal CPU and GPU Infrastructure with ServerMania

Choosing the right CPU or GPU infrastructure takes both expert guidance and a solution tailored to your workload. At ServerMania, we offer custom server configurations with specialized hardware, including GPU server hosting solutions and dedicated servers.

Whether you need scalable AI server infrastructure or powerful NVIDIA GPU servers, ServerMania provides flexible options backed by 24/7 support and global data centers. We also provide managed services and migration assistance to ensure smooth deployment and operation.

➡️ Contact the ServerMania support team and get started today!
