Kubernetes Clusters on Dedicated Infrastructure: Architecture & Deployment

Running Kubernetes GPU clusters on bare metal infrastructure gives you direct access to GPUs and full control over the physical hardware. With no virtualization layer between your containers and the underlying servers, workloads run on the hardware itself.

This setup improves workload performance for AI training, inference, batch jobs, and live video streaming. In short, it suits teams with strict performance and reliability requirements.

Here at ServerMania, we provide bare metal deployments for enterprises running Kubernetes through our GPU Hosting Solutions and GPU Server Clusters. We offer bare metal servers in secure data center environments with high availability and a production-ready Kubernetes control plane.

This guide explores how bare metal Kubernetes GPU clusters on physical infrastructure offer enhanced performance, full control, and predictable scaling for enterprises. We’re going through the key points, including advantages and challenges, architecture, deploying bare metal Kubernetes, and much more!

How Kubernetes Manages GPUs on Dedicated Infrastructure

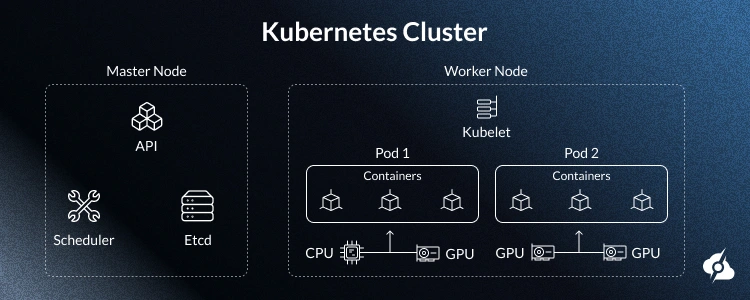

Bare metal Kubernetes handles GPUs through native hardware discovery and direct scheduling on physical servers. There is no hypervisor layer between container orchestration and the underlying hardware, so every GPU attaches directly to a bare metal machine and the Kubernetes cluster has full visibility into hardware resources.

One outcome matters most – you get GPUs exposed as first-class schedulable resources!

GPU Resource Discovery and Scheduling

GPU discovery starts at the node level and runs automatically on each bare metal machine. Node Feature Discovery (NFD) scans the physical hardware and identifies GPUs through PCI enumeration by matching NVIDIA's PCI vendor ID, 0x10de.

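Once a GPU is detected, NFD labels the node automatically, for example:

```text
feature.node.kubernetes.io/pci-10de.present=true
```

GPU Feature Discovery, which ships with the NVIDIA GPU Operator, then adds metadata labels for GPU count and model, such as nvidia.com/gpu.count and nvidia.com/gpu.product.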
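You can approximate the PCI scan behind this discovery by hand. The Bash sketch below is not how NFD is implemented. It assumes the standard Linux sysfs layout (/sys/bus/pci/devices/<address>/vendor and /class), and the function name count_nvidia_gpus is hypothetical. It counts devices whose vendor ID is 0x10de and whose PCI class begins with 0x03 (display controller). That filter keeps it from counting the audio or USB functions that some NVIDIA cards also expose.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count NVIDIA GPUs by reading PCI IDs from sysfs.
# Assumes the Linux layout /sys/bus/pci/devices/<address>/{vendor,class}.
count_nvidia_gpus() {
  local root="${1:-/sys/bus/pci/devices}"   # override for testing
  local count=0 dev vendor class
  for dev in "$root"/*; do
    # Skip anything that is not a readable PCI device directory
    [ -r "$dev/vendor" ] && [ -r "$dev/class" ] || continue
    vendor=$(<"$dev/vendor")
    class=$(<"$dev/class")
    # 0x10de is NVIDIA's vendor ID; class 0x03xxxx is a display controller
    if [ "$vendor" = "0x10de" ] && [[ "$class" == 0x03* ]]; then
      count=$((count + 1))
    fi
  done
  echo "$count"
}
```

On a GPU worker, running count_nvidia_gpus with no arguments prints the number of NVIDIA GPUs the kernel has enumerated.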

Labeling lets the Kubernetes scheduler place workloads only on nodes that have the specific hardware a job requires. This keeps jobs off incompatible nodes and keeps application performance consistent across multiple nodes.

Next, we'll show you a spec that asks the Kubernetes scheduler for exactly one GPU per pod and restricts placement to nodes with a specific GPU model, here the NVIDIA L4. The goal is to prevent accidental scheduling on incompatible physical machines.

Here’s how it works:

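```yaml
apiVersion: v1
kind: Pod
metadata:
  name: l4-workload                       # example name
spec:
  restartPolicy: Never
  nodeSelector:
    nvidia.com/gpu.product: "NVIDIA-L4"   # model label set by GPU Feature Discovery
  containers:
    - name: app
      image: nvidia/cuda:12.2.0-base-ubuntu22.04
      command: ["nvidia-smi"]
      resources:
        limits:
          nvidia.com/gpu: 1               # request exactly one whole GPU
```

Once the pod is scheduled, the NVIDIA device plugin allocates a GPU on that node to it exclusively. No other pod receives the same GPU while it runs, unless GPU sharing such as time slicing is enabled. This gives consistent isolation and reliable scheduling.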
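A nodeSelector is a plain equality filter: a node qualifies only if every key=value pair in the selector appears among its labels. The hypothetical helper node_matches_selector below mimics that rule on the comma-separated list that kubectl get nodes --show-labels prints in its LABELS column. It is a sketch for reasoning about placement, not the scheduler's code. It also ignores richer rules such as node affinity expressions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: does a node's label list satisfy a nodeSelector?
# Usage: node_matches_selector "k1=v1,k2=v2,..." "k1=v1,..."
node_matches_selector() {
  local labels=",$1,"   # pad with commas so every pair is delimited on both sides
  local IFS=','
  local pair
  for pair in $2; do
    [ -z "$pair" ] && continue
    # Every selector pair must appear verbatim; one miss rejects the node
    case "$labels" in
      *",$pair,"*) ;;
      *) return 1 ;;
    esac
  done
  return 0
}
```

Pass a node's LABELS value as the first argument and the pod's nodeSelector pairs as the second. A zero exit status means a nodeSelector check would accept that node. Other scheduling checks, such as free nvidia.com/gpu capacity, still apply.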

See Also: What is a Kubernetes Cluster?

Bare Metal vs Virtualized GPU Access

Modern GPU workloads quickly expose the limits of cloud virtualization: no matter how powerful the virtual environment is, the hypervisor restricts how workloads reach the GPU.

Kubernetes on bare metal runs directly on physical hardware, while VM-based Kubernetes inserts a hypervisor layer between workloads and GPUs. This difference affects throughput, latency, scheduling accuracy, and long-term stability.

For enterprises with strict reliability requirements and GPU-dense workloads, bare metal infrastructure offers a cleaner and more predictable execution model.

Why Kubernetes on bare metal wins for GPU workloads:

- Direct PCIe access allows GPUs to communicate with the CPU and memory without interception, increasing throughput and reducing latency under sustained load.

- Skipping nested virtualization removes hypervisor scheduling contention and eliminates the failure points introduced by virtual machine stacks.

- Full CUDA capability exposure ensures workloads access the complete CUDA feature set without limitations imposed by virtualized drivers.

- Native GPU-to-GPU communication enables faster data exchange between devices during distributed training and parallel inference tasks.

- Support for NVLink (on GPUs that provide it) and GPUDirect RDMA improves inter-GPU bandwidth and reduces network traffic during large-scale model training.

- MIG support on A100 and H100 allows precise GPU partitioning for multi-tenancy across Kubernetes namespaces.

Here’s a quick comparison:

| Feature | Bare Metal | Virtual Machines |
|---|---|---|
| GPU access | Direct | Abstracted |
| Overhead | No hypervisor overhead | Often 10–15% (workload-dependent) |
| Control | Full control | Limited |
| Performance | Enhanced, consistent | Variable |

Did You Know❓

NVIDIA GPU Operator automates GPU management in Kubernetes through five core components: Driver Containers, Container Toolkit, Device Plugin, DCGM Monitoring, and GPU Feature Discovery. On bare metal, these components work with direct PCIe access and avoid the virtualization overhead of cloud instances, which is often estimated at 10–15%.

See Also: Docker vs Kubernetes

Kubernetes GPU Clusters on Bare Metal | Pros & Cons

Running bare metal Kubernetes with GPUs delivers big gains in performance, control, and efficiency, but it also introduces operational tradeoffs. Unlike managed services or VM-based clusters, you own the full stack from the underlying hardware to the Kubernetes control plane.

Here, we'll break down where Kubernetes on bare metal wins and where it poses significant challenges for platform teams.

Advantages of Bare Metal Kubernetes

Bare metal Kubernetes stands apart from VM-based Kubernetes by removing abstraction layers and exposing physical hardware directly to the Kubernetes cluster.

This architecture benefits GPU-intensive workloads, where performance, cost efficiency, and control drive the most important infrastructure decisions.

Here are some of the standout advantages:

- No Virtualization Overhead: Eliminating the hypervisor layer allows workloads to access the underlying server hardware directly, which increases throughput.

- Decreased Network Latency: Running Kubernetes on bare metal can cut network latency substantially compared to virtual machines (by up to three times in favorable cases), improving data transfer.

- Lower Infrastructure Costs: Removing hypervisor licensing fees and simplifying operations helps enterprises reduce costs for long-running GPU workloads.

- Full Infrastructure Control: Teams gain full control over hardware configurations, operating systems, and container runtimes, enabling precise tuning for specific hardware requirements.

- Stronger Security Posture: Direct control over bare metal infrastructure supports granular security policies and removes exposure to hypervisor-level attack surfaces.

- Optimal Fit for AI and HPC: Bare metal deployments provide unmediated GPU access and high-bandwidth data loading for AI training, HPC, and inference.

- Max Hardware Utilization: Providing direct access to hardware resources allows applications to fully utilize GPUs, CPUs, and memory without virtualization overhead.

Challenges of Bare Metal Kubernetes

While you achieve high availability, complete control, and maximum performance with Kubernetes on bare metal, there are quite a few downsides that we must not overlook.

These challenges affect day-to-day operations, scaling speed, and fault tolerance when compared to VM-based clusters and cloud platforms.

Here are some of the biggest challenges:

- Manual Provisioning: Scaling bare metal Kubernetes means procuring hardware, installing it on site, configuring the network, and much more.

- Higher Complexity: Unlike in cloud environments, teams running Kubernetes on bare metal must manage tasks that a hypervisor layer would normally handle.

- Slower Scaling: Adding capacity requires manual node onboarding and network configuration rather than instant resource allocation.

- Node Failure Impact: Each bare metal machine acts as a standalone node, so a single node failure affects all containers running on that server.

- Migration Difficulties: Creating image-based backups or migrating workloads between physical machines is harder without virtualization abstractions.

- Storage Complexity: Maintaining performance and consistency across storage layers becomes more difficult as bare metal deployments scale.

- Expertise Requirements: Organizations without experience managing physical hardware and server operating system stacks may struggle to operate clusters efficiently.

Each organization must weigh these advantages against the limitations and decide which model suits its workloads and goals.

Deploying Bare Metal Kubernetes GPU Clusters

Now that we know the key benefits and standout limitations of a bare metal setup, we’re going to walk you through a complete production-grade deployment.

We’re going to explain each step, why you need it, and how to execute it:

Prerequisites and Initial Setup

Before you deploy Kubernetes, every bare metal server must meet the following requirements:

- Ubuntu 22.04 LTS or RHEL 8.x installed on all nodes

- A container runtime: containerd 1.6+ (Docker Engine 20.10+ works only through the cri-dockerd adapter, since Kubernetes 1.24 removed dockershim)

- A static IP address assigned to each physical machine

- A unique hostname on every node, with swap disabled at the host OS level

- Network connectivity between all worker nodes and control plane nodes

⚙️Kubernetes tooling required:

- kubeadm and kubelet 1.28+ on every node

- kubectl 1.28+ on the control plane node or your admin workstation

- helm 3.12+ wherever you run cluster administration
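Before you run kubeadm, it helps to check two requirements that often trip up a fresh node. kubeadm's own preflight checks also flag swap, so this only surfaces problems earlier. Both function names below are hypothetical, and the helpers are not part of kubeadm. swap_is_off reads /proc/swaps, which holds a header line plus one line per active swap device. missing_tools prints any required commands that are not on the PATH.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for a bare metal node.

# swap_is_off [SWAPS_FILE]
# /proc/swaps always has a header line; any line after it is an active swap device.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ -z "$(tail -n +2 "$swaps")" ]
}

# missing_tools TOOL...
# Prints the tools that are not found on PATH, separated by spaces.
missing_tools() {
  local tool
  local -a missing=()
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || missing+=("$tool")
  done
  echo "${missing[*]}"
}

# Example usage on a node:
#   swap_is_off || echo "swap is on: run 'sudo swapoff -a' and remove swap entries from /etc/fstab"
#   m=$(missing_tools kubeadm kubelet kubectl helm); [ -z "$m" ] || echo "missing: $m"
```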

Installing Kubernetes on Bare Metal

The deployment starts by initializing the Kubernetes control plane on the first master node. This step creates the cluster's certificate authority, configures core services, and defines the network range used by pods.

The command below sets a stable API endpoint for the cluster. It also uploads the control plane certificates so that additional control plane nodes can retrieve them when they join:

```bash
sudo kubeadm init \
  --control-plane-endpoint="k8s-api.example.com:6443" \
  --upload-certs \
  --pod-network-cidr=10.244.0.0/16
```

Once initialization completes, kubectl access must be configured for cluster administration. This allows you to interact with the Kubernetes cluster from the control plane node.

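```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Kubernetes needs a pod network so that pods on different nodes can communicate. Installing Calico provides routing for containers running across multiple nodes.

Calico is deployed through the Tigera operator, which also needs an Installation resource. Download custom-resources.yaml from the same release (https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/custom-resources.yaml). Change its default IP pool of 192.168.0.0/16 to the 10.244.0.0/16 range passed to kubeadm init, then create both:

```bash
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/tigera-operator.yaml
kubectl create -f custom-resources.yaml   # cidr edited to 10.244.0.0/16
```

Joining Control Plane and GPU Worker Nodes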

High availability starts with additional control plane nodes, which replicate cluster state and protect the control plane against the failure of any single node.

The join command below securely connects new master nodes to the existing Kubernetes control plane. kubeadm init prints this command with the actual token, hash, and certificate key:

```bash
sudo kubeadm join k8s-api.example.com:6443 \
  --token <token> \
  --discovery-token-ca-cert-hash sha256:<hash> \
  --control-plane --certificate-key <cert-key>
```

Next, GPU-enabled worker nodes join the cluster.

These nodes host GPU workloads and execute containerized applications!

```bash
sudo kubeadm join k8s-api.example.com:6443 \
  --token <token> \
  --discovery-token-ca-cert-hash sha256:<hash>
```

After the nodes join, label them to guide scheduling. This ensures GPU workloads run only on nodes with the right hardware.

```bash
kubectl label nodes gpu-worker-1 \
  node-role.kubernetes.io/gpu-worker=true \
  feature.node.kubernetes.io/gpu=true
```

Deploying the NVIDIA GPU Operator

The NVIDIA GPU Operator automates the GPU driver installation, plugin deployment, and monitoring across the bare metal Kubernetes cluster. This removes manual driver management and standardizes GPU behavior on all nodes.

In short, Helm simplifies the installation and ensures all operator components deploy consistently!

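```bash
helm repo add nvidia https://helm.ngc.nvidia.com/nvidia
helm repo update
kubectl create namespace gpu-operator
```

The command below installs the operator with production settings. It enables DCGM monitoring, GPU Feature Discovery, and the MIG manager. The MIG manager only partitions MIG-capable GPUs such as the A100 and H100; the L4 does not support MIG.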

```bash
helm install gpu-operator nvidia/gpu-operator \
  -n gpu-operator \
  --version v23.9.1 \
  --set driver.enabled=true \
  --set driver.version=535.129.03 \
  --set toolkit.enabled=true \
  --set devicePlugin.enabled=true \
  --set dcgmExporter.enabled=true \
  --set gfd.enabled=true \
  --set migManager.enabled=true \
  --set nodeStatusExporter.enabled=true \
  --wait
```

Verification and Testing ✅
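Before testing workloads, confirm that the GPU Operator has finished rolling out. The Bash sketch below assumes kubectl is configured for the cluster and the operator runs in the gpu-operator namespace created above. operator_pods_ready is a hypothetical name. It reads the READY and STATUS columns of kubectl get pods --no-headers. It succeeds only when at least one pod exists and every pod is either Completed (as the operator's validation pods end up) or Running with all containers ready.

```bash
#!/usr/bin/env bash
# Hypothetical readiness check for the GPU Operator namespace.
# Columns of `kubectl get pods --no-headers`: NAME READY STATUS RESTARTS AGE
operator_pods_ready() {
  local ns="${1:-gpu-operator}"
  kubectl get pods -n "$ns" --no-headers 2>/dev/null |
    awk 'BEGIN { seen = 0; bad = 0 }
         {
           seen++
           if ($3 == "Completed") next          # finished validator pods are fine
           split($2, ready, "/")                # READY is "ready/total"
           if ($3 != "Running" || ready[1] != ready[2]) bad++
         }
         END { exit (seen == 0 || bad > 0) }'   # non-zero exit = not ready yet
}

# Example: poll until every operator pod is up
#   until operator_pods_ready; do sleep 10; done
```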

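GPU time slicing improves utilization by allowing multiple workloads to share a single GPU, which supports multi-tenancy. Unlike MIG, however, time slicing provides no memory or fault isolation between the workloads sharing a GPU, so reserve it for trusted or cooperative workloads.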

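```bash
kubectl apply -f - <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: device-plugin-config
  namespace: gpu-operator
data:
  any: |
    version: v1
    sharing:
      timeSlicing:
        renameByDefault: false
        failRequestsGreaterThanOne: false
        resources:
          - name: nvidia.com/gpu
            replicas: 4   # each physical GPU is advertised as 4 nvidia.com/gpu
EOF

# Tell the GPU Operator's device plugin to use this ConfigMap,
# with the "any" key as the default configuration for every node
kubectl patch clusterpolicies.nvidia.com/cluster-policy -n gpu-operator \
  --type merge \
  -p '{"spec": {"devicePlugin": {"config": {"name": "device-plugin-config", "default": "any"}}}}'
```

Lastly, a simple CUDA job verifies GPU access at runtime. Successful output confirms the containers are running with direct access to the GPU.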

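```yaml
# gpu-test.yaml: apply with `kubectl apply -f gpu-test.yaml`,
# then read the result with `kubectl logs job/gpu-test`
apiVersion: batch/v1
kind: Job
metadata:
  name: gpu-test
spec:
  template:
    spec:
      containers:
        - name: cuda-test
          image: nvidia/cuda:12.2.0-base-ubuntu22.04
          command: ["nvidia-smi", "-L"]   # lists the GPUs visible to the container
          resources:
            limits:
              nvidia.com/gpu: 1
      restartPolicy: Never
      nodeSelector:
        node-role.kubernetes.io/gpu-worker: "true"
```

See Also: What is Containerization?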

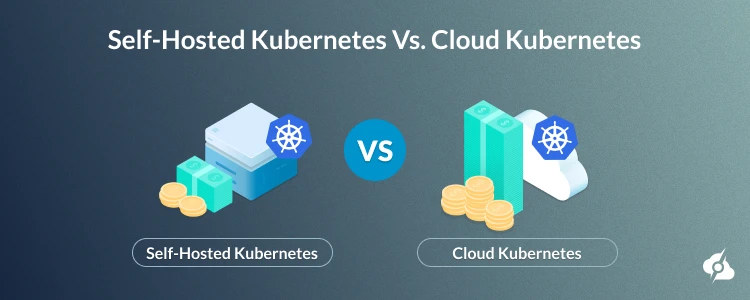

Self-Hosted vs Cloud Kubernetes: Cost Comparison

Kubernetes GPU clusters running on cloud-managed platforms such as EKS, GKE, or AKS carry high recurring costs that scale rapidly with GPU workloads.

Each GPU node includes fees for compute, storage, and network traffic, along with additional charges for the control plane and load balancers. When your data science team runs machine learning or deep learning pipelines 24/7, the cumulative cost can exceed that of running dedicated on-premises hardware full time.

Cloud Managed Kubernetes Costs

In a standard setup with 10 GPU nodes, each using 2x NVIDIA L4 GPUs, compute dominates the bill. For illustration, each GPU node in these environments costs around $5.67 per hour. Network usage, storage, and control plane charges bring the total to about $42,000 per month. Annual TCO therefore rises to over $500,000, and the 3-year total exceeds $1.5 million.
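The monthly estimate is simple arithmetic. The minimal sketch below uses the example $5.67 hourly rate and assumes about 730 billable hours per month (8,760 hours a year divided by 12). monthly_compute_cost is a hypothetical name, and it deliberately leaves out network, storage, and control plane charges.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute-only monthly cost for a fleet of GPU nodes.
# Usage: monthly_compute_cost NODES HOURLY_RATE [HOURS_PER_MONTH]
monthly_compute_cost() {
  awk -v n="$1" -v rate="$2" -v hours="${3:-730}" \
    'BEGIN { printf "%.2f\n", n * rate * hours }'
}

# Example: 10 GPU nodes at $5.67/hour
#   monthly_compute_cost 10 5.67    # prints 41391.00
```

With the example inputs, compute alone comes to about $41,400, nearly all of the roughly $42,000 monthly total.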

Self-Hosted Infrastructure Costs

Deploying the same Kubernetes cluster on bare metal through ServerMania significantly reduces your spending. A GPU dedicated server setup with 10 worker nodes and 3 master nodes runs about $14,500 per month, including networking, management overhead, and GPU hardware.

The GPU nodes use one AMD EPYC series processor and 2x NVIDIA L4 GPUs per server for strong parallel computing performance. Total TCO reaches roughly $522,000 over three years.

3-Year TCO and ROI Analysis

The savings can be substantial: monthly expenses drop by about 65%, cutting roughly $27,500 from operating costs each month. Over one year, that means $331,000 saved.

Over three years, the savings reach $993,000, while performance typically stays equal or better thanks to direct PCIe access and full CUDA compatibility.

A simplified comparison:

| Year | Cloud Kubernetes (EKS/GKE/AKS) | Self-Hosted (Bare Metal) | Savings |
|---|---|---|---|
| 1 | $505,000 | $174,000 | $331,000 |
| 2 | $505,000 | $174,000 | $331,000 |
| 3 | $505,000 | $174,000 | $331,000 |
| Total (3 years) | $1,515,000 | $522,000 | $993,000 |
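You can reproduce the savings column and the 65% figure from the two annual totals. The minimal sketch below uses a hypothetical function name and takes the example estimates from the table, not real quotes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: multi-year savings and savings as a share of cloud spend.
# Usage: tco_savings CLOUD_ANNUAL SELF_HOSTED_ANNUAL YEARS
tco_savings() {
  awk -v cloud="$1" -v hosted="$2" -v years="$3" 'BEGIN {
    savings = (cloud - hosted) * years        # total saved over the period
    pct = (cloud - hosted) / cloud * 100      # percentage reduction per year
    printf "%d %.1f\n", savings, pct
  }'
}

# Example with the table's estimates:
#   tco_savings 505000 174000 3    # prints "993000 65.5"
```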

⚠️Disclaimer: The numbers are example estimates, and actual pricing depends on factors such as region, market conditions, hardware availability, and specific configuration choices.

Kubernetes Bare Metal | Hardware Sizing

Choosing the right hardware for bare metal Kubernetes GPU clusters directly affects your performance, stability, and cost efficiency. On bare metal infrastructure, sizing errors surface fast because workloads run with direct access to physical hardware and no hypervisor layer to absorb mistakes.

Here we'll match common Kubernetes GPU use cases to proven ServerMania hardware configurations built around NVIDIA L4 Tensor Core GPUs.

Development and Test Clusters

Development clusters prioritize flexibility and low entry cost. A typical setup uses one master node and two to four GPU worker nodes inside a bare metal Kubernetes cluster.

NVIDIA L4 GPUs provide sufficient compute for experimentation, CI pipelines, and model validation without overcommitting hardware resources.

This layout fits teams iterating quickly on containerized applications before promotion to production.

Production Inference Clusters

Inference clusters focus on throughput, latency, and uptime. A common architecture uses three HA control plane nodes and seven to seventeen GPU workers.

NVIDIA L4 GPUs paired with GPU time slicing support multi-tenancy across Kubernetes namespaces while maintaining predictable performance. These clusters handle real-time APIs, recommendation systems, and streaming inference with consistent workload performance across multiple nodes.
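Time slicing multiplies the GPU count the scheduler sees, not the hardware. The rough capacity sketch below assumes every worker carries the same number of GPUs and uses the same replicas value (4 in the time-slicing ConfigMap earlier in this guide). The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: physical GPUs vs. schedulable nvidia.com/gpu slots.
# Usage: gpu_capacity WORKERS GPUS_PER_NODE REPLICAS
gpu_capacity() {
  local workers=$1 per_node=$2 replicas=$3
  local physical=$(( workers * per_node ))
  echo "physical=$physical schedulable=$(( physical * replicas ))"
}

# A 7-worker inference cluster with 2x L4 per node and 4 time-slicing replicas:
#   gpu_capacity 7 2 4    # prints "physical=14 schedulable=56"
```

The 56 slots in that example still share the memory and compute of 14 physical GPUs, so per-pod throughput drops as more replicas become busy.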

Large Scale Training Clusters

Training-oriented clusters emphasize bandwidth and coordination across many GPUs. Deployments typically include three to five HA masters and twenty or more GPU workers. NVIDIA L4 GPUs scale horizontally with fast networking to support distributed AI/ML training and large batch workloads.

This configuration suits organizations training large models on on-premises bare metal infrastructure, where long-running jobs and cost predictability matter.

| Deployment Type | Nodes | GPUs per Node | CPU and RAM | Network | Use Case |
|---|---|---|---|---|---|
| Development / Test | 3 to 5 | 1x L4 | 16 cores, 64GB | 10GbE | Model development, testing |
| Small Production | 5 to 10 | 2x L4 | 32 cores, 128GB | 25GbE | Under 50K queries per day |
| Medium Production | 10 to 20 | 2x L4 | 32 cores, 256GB | Dual 25GbE | 50K to 500K queries per day |
| Large Inference | 20 to 50 | 4x L4 | 64 cores, 512GB | 100GbE | 500K+ queries per day |
| Training Focused | 10 to 30 | 4x L4 | 64 cores, 512GB | 100GbE | Distributed training workloads |
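For inference, you can turn the table's query-volume column into a quick lookup. The hypothetical helper below encodes only the thresholds listed above. The table does not say which tier owns the boundary, so the helper assumes exactly 500,000 queries per day still counts as Medium Production.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map daily query volume to an inference tier from the sizing table.
# Usage: inference_tier QUERIES_PER_DAY
inference_tier() {
  local qpd=$1
  if [ "$qpd" -lt 50000 ]; then
    echo "Small Production"      # under 50K queries per day
  elif [ "$qpd" -le 500000 ]; then
    echo "Medium Production"     # 50K to 500K (boundary assumed inclusive)
  else
    echo "Large Inference"       # above 500K
  fi
}

# Example:
#   inference_tier 120000    # prints "Medium Production"
```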

See Also: How to Build a GPU Cluster for Deep Learning

Operating Kubernetes GPU Clusters at Scale on Bare Metal

To show what operating Kubernetes GPU clusters at scale looks like, we'll walk you through some common scenarios and risk-management strategies. We'll cover common practices and what matters most to Kubernetes users after the initial deployment.

🔹Handling Node Failure and Stability

Clusters composed of dedicated machines behave differently from virtualized environments. When a node fails, every workload scheduled on it stops immediately.

So, events such as an operating system kernel panic highlight the importance of spreading workloads across multiple host servers to limit the blast radius. This model will provide more control, but it also requires disciplined operational processes.
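One way to spread replicas across host servers is a topology spread constraint keyed on kubernetes.io/hostname. The sketch below wraps a Deployment in the same kubectl apply heredoc style used earlier in this guide. The function name, the Deployment name inference-api, its labels, the replica count, and the placeholder container command are all hypothetical. With maxSkew: 1 and DoNotSchedule, no eligible node ends up with more than one replica above the least-loaded one. A single node failure then takes out only a fraction of the replicas.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply a Deployment whose replicas are spread across nodes.
# Requires kubectl access to the cluster.
apply_spread_deployment() {
  kubectl apply -f - <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: inference-api
spec:
  replicas: 4
  selector:
    matchLabels:
      app: inference-api
  template:
    metadata:
      labels:
        app: inference-api
    spec:
      # Per-node replica counts may differ by at most 1
      topologySpreadConstraints:
        - maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: DoNotSchedule
          labelSelector:
            matchLabels:
              app: inference-api
      containers:
        - name: api
          image: nvidia/cuda:12.2.0-base-ubuntu22.04
          command: ["sleep", "infinity"]   # placeholder workload
          resources:
            limits:
              nvidia.com/gpu: 1
EOF
}
```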

🔹Backups, Rebuilds, and Provisioning

Bare metal environments favor fast reprovisioning over live migration.

For instance, the Tinkerbell Cluster API provider supports repeatable provisioning workflows by treating infrastructure as code. This approach prioritizes predictable rebuilds and keeps more resources allocated to workloads instead of virtualization overhead.

🔹Networking and Load Distribution

As a platform grows, load balancing becomes critical for maintaining service stability. Proper traffic distribution reduces pressure on individual host servers and improves availability during demand spikes. This design choice directly affects latency and throughput under sustained load.

🔹Security and Isolation Considerations

Operating outside a cloud provider shifts the security model. Unlike in a public cloud, teams assume full responsibility for security across hardware, networking, and access control. In return, private cloud deployments gain physical isolation and tighter enforcement boundaries.

🔹Choosing the Right Operating Model

The decision between a public cloud and a private cloud depends on your operational priorities. Some organizations prefer the abstraction offered by a cloud provider. Others follow Cloud Native Computing Foundation (CNCF) guidance and still choose to own their infrastructure.

Bare metal suits teams seeking predictability, accountability, and direct oversight of production systems, but the right choice ultimately depends on your workloads.

⚖️Compare: AMD vs NVIDIA GPU

Switch to Self-Hosted Kubernetes GPU Infrastructure

ServerMania helps enterprises move away from costly cloud Kubernetes platforms by delivering bare metal infrastructure built for GPU workloads. Our NVIDIA L4 Tensor Core GPU servers and purpose-built server clusters support bare metal Kubernetes with predictable performance, direct access to underlying hardware, and full control over the entire stack.

You gain a production platform for AI training, inference, and large-scale containerized applications, backed by expert support and infrastructure designed to scale without cloud lock-in.

Why ServerMania?

We combine high-performance bare metal infrastructure, flexible hardware options, and deep Kubernetes expertise to support GPU workloads in production. Here is the ServerMania advantage:

- ✅ Enterprise-grade NVIDIA GPU Servers

- ✅ Flexible configurations & custom builds

- ✅ Global data centers [99.99% uptime SLA]

- ✅ 24/7 expert support for Kubernetes GPUs

- ✅ No long-term contracts, scale as needed

The Migration Path:

Move from cloud to self-hosted Kubernetes in structured phases:

- Start with a proof of concept (a 3-node dev cluster)

- Migrate non-critical workloads first

- Scale workloads gradually and with confidence

- Begin the full production migration within 6 months

How to Get Started?

To begin, explore our GPU Servers and Server Clusters to identify hardware, prices, and availability that match your preference and workload.

Then you have several options:

- Schedule Free Consultation: Book a free consultation and discuss your project with one of our Kubernetes GPU experts.

- Contact 24/7 Support Line: You can get in touch with ServerMania’s customer support service immediately and receive a custom quote/pricing.

- Place an Order Immediately: If you feel confident in your decision, you can order directly from our dedicated servers page.

💬Contact ServerMania today – We’re available right now!
