Building Private RAG (Retrieval-Augmented Generation) Systems on Dedicated GPUs: Enterprise Infrastructure

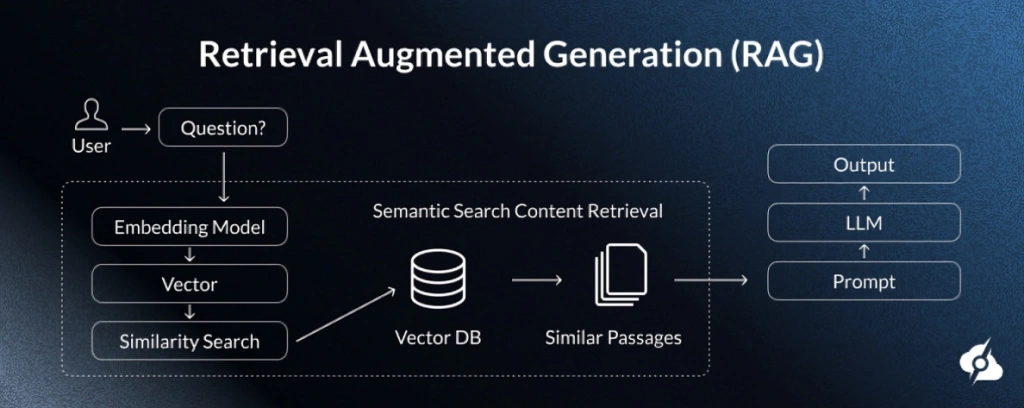

Retrieval-Augmented Generation (RAG) connects large language models (LLMs) with external data sources to deliver context-aware responses.

Instead of relying solely on what the model learned during pre-training, RAG systems retrieve relevant documents, structured data, and unstructured data from multiple sources. This improves accuracy and gives enterprise applications access to current data. As enterprises adopt generative AI for question answering, private RAG offers better control over customer data.

ServerMania provides dedicated GPU infrastructure optimized for building and deploying private RAG systems. You gain full control over vector databases, embedding models, and knowledge graphs while keeping enterprise data secure.

In this guide, you’ll learn how to design, deploy, and optimize private RAG systems on dedicated GPUs to achieve secure, cost-efficient, and scalable enterprise AI performance.

See Also: What is a GPU Dedicated Server?

Why Enterprises Are Moving to Private RAG

Public LLM APIs expose enterprise data to external vendors and increase long-term operational costs. Private RAG systems address these issues by combining retrieval augmented generation with dedicated infrastructure. They allow retrieving relevant information without sending customer information to any third-party services, enhancing privacy.

Enterprises in healthcare, finance, and government are moving to private RAG to meet GDPR, HIPAA, and SOC 2 requirements. Running retrieval on your own servers or private cloud keeps source documents and retrieved knowledge within your control.

This model also reduces dependency on public APIs, ultimately reducing computational and financial costs, and improves response accuracy by connecting models directly to verified internal data sources.

Private RAG LLM & Infrastructure Requirements

RAG, or Retrieval-Augmented Generation, connects large language models with your company’s own data sources. A RAG system retrieves relevant documents from internal or external databases before generating a response.

This process helps the model deliver “context-aware”, accurate responses based on your enterprise data. RAG is ideal for use cases like legal contract analysis, healthcare record searches, financial SEC filing analysis, and technical documentation Q&A, where accuracy and data privacy matter the most.

Teaching LLMs to Use Your Documents

RAG works by separating two tasks: retrieval and generation.

The system first searches your structured and unstructured data for passages relevant to the query. The large language model then generates an answer using that retrieved context. The result is a response grounded in your own data that keeps the fluency of a traditional generative model.
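The two stages can be sketched in a few lines of Python. This is a minimal illustration rather than a production retriever: the word-overlap scoring, the `retrieve` and `build_prompt` helpers, and the sample documents are all assumptions made for the example. A real system would rank by embedding similarity and send the prompt to an LLM.

```python
def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Stage 1: rank documents by how many query words they share (toy scoring)."""
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(doc.lower().split())), doc) for doc in documents
    ]
    # Keep only documents with at least one shared word, best first.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Stage 2 input: the retrieved passages are placed ahead of the question."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


# Hypothetical sample documents, for illustration only.
docs = [
    "Employees accrue 20 vacation days per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the fifth of each month.",
]
question = "How many vacation days do employees get?"
passages = retrieve(question, docs)
prompt = build_prompt(question, passages)  # this string is what the LLM receives
```

Keeping retrieval and prompt construction as separate functions mirrors the split described above: either stage can be replaced without touching the other.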

Four Critical Infrastructure Components

RAG systems require four core components:

- Vector Database: Stores document embeddings for semantic and hybrid search. These workloads are CPU-intensive and benefit from high-memory servers.

- Embedding Model: Converts documents and user queries into numerical representations called vectors. These models run efficiently on CPUs or small GPUs.

- LLM Inference: Generates answers using retrieved context. This process requires dedicated GPUs to maintain low latency and high throughput.

- Orchestration Layer: Manages the flow between retrieval and generation, ensuring smooth execution across all components.

A hybrid CPU-GPU setup delivers the best performance for RAG.

Vector databases and embedding models run on CPU servers optimized for memory and indexing. LLM inference runs on GPU servers to accelerate generation. Combined, they create a scalable retrieval workflow that keeps sensitive data protected while delivering accurate responses in real time.

RAG Architecture on Dedicated Infrastructure

Enterprise-grade RAG systems perform best on dedicated servers.

Each component of a retrieval-augmented generation pipeline demands specific compute and memory resources. Dedicated hardware eliminates resource contention, improves reliability, and keeps sensitive enterprise data private. Such a setup supports fast semantic search, efficient embedding generation, and low-latency LLM inference without relying on shared virtualized or cloud environments.

See Also: What is the Best GPU Server for AI and Machine Learning?

Overview and Hardware Recommendations

1. Vector Database (CPU-Intensive)

The vector database stores document embeddings and performs semantic or hybrid search across structured and unstructured data. Dedicated CPU servers with high memory keep indexing and queries fast, which makes them a good fit for this workload.

| Element | Details |
|---|---|
| Function | Stores document embeddings and performs semantic or hybrid search across structured and unstructured data. |
| Recommended Options | Qdrant (<10M vectors), Weaviate (feature-rich), Milvus (enterprise-scale) |
| Hardware Requirements | 128 GB+ RAM, NVMe storage, 32+ CPU cores |

🔹 ServerMania Recommendation: Dual EPYC 9634 (84 cores per CPU, 168 cores total) with 256 GB RAM for consistent performance.

2. Embedding Model (CPU or Small GPU)

Embedding models convert text into numerical vectors for semantic search. Running them on dedicated servers keeps throughput consistent and places them close to the vector database.

| Element | Details |
|---|---|
| Function | Converts text into numerical vectors for semantic search. |
| Model Options | Sentence-transformers and models developed by Facebook AI Research, now Meta AI (FAIR) |
| Performance | CPU: 50–100 embeddings/sec; NVIDIA T4 GPU: 500–800 embeddings/sec |

🔹 ServerMania Recommendation: Run embedding models on dedicated CPU servers hosting the vector database for balanced throughput and cost efficiency.

3. LLM Inference (GPU-Required)

LLM inference generates answers using the context retrieved from your enterprise data. Dedicated GPU servers provide the low latency and high throughput that enterprise-scale RAG systems need.

| Element | Details |
|---|---|
| Function | Generates answers using the retrieved context from enterprise data. |
| Model Sizes | 7B (Mistral-7B, Llama-3-8B): 1–2× RTX 4090; 13B (Llama-2-13B): 2× RTX 4090 or 1× A6000; 70B (Llama-2-70B): 4× A100 or 2× H100 |
| Serving Frameworks | vLLM (production), TGI (Hugging Face), Ollama (development) |

🔹 ServerMania Recommendation: 2× RTX 4090 dedicated GPU servers for high-throughput 7B–13B model inference.
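The GPU counts in the table follow from a common rule of thumb: in 16-bit precision each parameter takes 2 bytes, so the weights alone need roughly twice the parameter count (in billions) in gigabytes. The sketch below is an estimate under that assumption only. It ignores KV cache, activations, and framework overhead, and the helper names are hypothetical.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for model weights alone (FP16/BF16 uses 2 bytes per parameter)."""
    return params_billion * bytes_per_param  # 1e9 params * bytes / 1e9 bytes per GB


def fits(params_billion: float, gpu_count: int, gpu_memory_gb: float,
         bytes_per_param: float = 2.0) -> bool:
    """True if the weights fit in the combined GPU memory (no headroom for KV cache)."""
    return weight_memory_gb(params_billion, bytes_per_param) <= gpu_count * gpu_memory_gb


print(weight_memory_gb(7))    # 14.0 GB of weights
print(fits(13, 1, 24))        # False: 26 GB exceeds one 24 GB card
print(fits(13, 2, 24))        # True: 48 GB across two cards
print(fits(70, 2, 80))        # True: 140 GB of weights in 160 GB
```

In practice, leave headroom beyond the weights, since the KV cache grows with context length and the number of concurrent requests.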

4. Orchestration Layer (Lightweight)

The orchestration layer manages the flow between retrieval and generation while coordinating multiple AI agents that handle caching, API requests, and context retrieval.

This layer is lightweight, so a small dedicated node is enough to keep it responsive.

| Element | Details |
|---|---|
| Function | Coordinates retrieval and generation, handles caching, batching, and API calls. |
| Frameworks | LangChain, LlamaIndex, FastAPI setup |
| Caching | Redis with a 70–90% cache hit rate to reduce GPU load and latency. |

🔹 ServerMania Recommendation: 2–4 CPU cores, 8 GB RAM, hosted on a dedicated node or shared with a vector database server.
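To illustrate how a cache reduces GPU load, here is a minimal in-memory sketch of semantic caching: a query is served from the cache when its embedding is close enough to one seen before. The `SemanticCache` class, the 0.95 threshold, and the toy three-dimensional vectors are assumptions for the example. A production setup would keep the entries in Redis, as the table suggests, and use real embeddings.

```python
import math
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Returns a stored answer when a new query embedding is close to a cached one."""

    def __init__(self, threshold: float = 0.95):
        self.threshold = threshold  # hypothetical similarity cutoff
        self.entries: list[tuple[list[float], str]] = []

    def get(self, embedding: list[float]) -> Optional[str]:
        # Find the most similar cached embedding, if any exist.
        best = max(self.entries, key=lambda e: cosine(e[0], embedding), default=None)
        if best is not None and cosine(best[0], embedding) >= self.threshold:
            return best[1]
        return None

    def put(self, embedding: list[float], answer: str) -> None:
        self.entries.append((embedding, answer))


cache = SemanticCache()
cache.put([1.0, 0.0, 0.0], "20 days per year")
hit = cache.get([0.99, 0.05, 0.0])   # near-duplicate query: served from cache
miss = cache.get([0.0, 1.0, 0.0])    # unrelated query: falls through to the LLM
```

Every hit skips both retrieval and LLM inference, which is where the latency and GPU savings come from.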

Bottom line: Dedicated infrastructure provides full control, compliance, and consistent performance for enterprise RAG systems.

It eliminates dependency on shared resources and public APIs while supporting secure, scalable retrieval augmented generation workflows within your organization.

Sizing RAG Infrastructure for Deployment Scale

Sizing your RAG infrastructure depends on the number of documents, query volume, and model size. Dedicated servers provide consistent performance, fast retrieval, and secure handling of enterprise data. Here is a quick breakdown for small, medium, and large deployments.

Small RAG (Department-Level)

Small deployments support up to 1 million documents and 1,000 queries per day. They focus on cost efficiency while delivering accurate, context-aware responses.

- Vector Database: 1× CPU server, 64 GB RAM, 1 TB NVMe

- LLM Inference: 1× RTX 4090 (7B model)

- Use Case: Knowledge Library or Internal Wiki Q&A

Explore: ServerMania’s NVIDIA GPU Servers

Medium RAG (Company-Wide)

Medium deployments handle 1–10 million documents and about 1,000–10,000 queries per day. They balance performance, redundancy, and scalability for enterprise AI applications.

- Vector Database: 2× CPU servers, 128 GB RAM, 2 TB NVMe each

- Embedding Model: 1× NVIDIA L4 Tensor Core GPU (dedicated)

- LLM Inference: 2× NVIDIA L4 Tensor Core GPUs (dedicated)

- Use Case: Enterprise Knowledge Management or AI Customer Support

Large RAG (Enterprise-Scale)

Large deployments process over 10 million documents and 10,000–100,000 queries per day. They require the highest-capacity servers and multiple GPUs for low-latency and multi-tenant operations.

- Vector Database: 4× CPU servers, 256 GB RAM, 4 TB NVMe each

- Embedding Model: 2× NVIDIA L4 GPUs (load balanced)

- LLM Inference: 4× NVIDIA L4 Tensor Core GPUs

- Use Case: Multi-Tenant RAG Platform or Regulatory Compliance

Explore: ServerMania Enterprise Server Solutions

Here is an easy-to-scan sizing overview:

| Scale | Documents | Queries/Day | CPU Nodes | GPU Config |
|---|---|---|---|---|
| Small | 100K–1M | ~100–1K | 1 | 1× NVIDIA L4 24GB |
| Medium | 1M–10M | ~1K–10K | 2 | 2× NVIDIA L4 24GB |
| Large | 10M+ | ~10K–100K | 4 | 4× NVIDIA L4 24GB |
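The table can also be read as a simple lookup: take the smallest tier whose document and query limits both cover your workload. The sketch below encodes the upper bounds from the table. The `pick_tier` function is a hypothetical helper, and treating the upper bounds as hard limits is an assumption.

```python
def pick_tier(documents: int, queries_per_day: int) -> dict:
    """Return the smallest tier whose limits cover both dimensions of the workload."""
    tiers = [
        # (name, max documents, max queries/day, CPU nodes, GPU config)
        ("Small", 1_000_000, 1_000, 1, "1× NVIDIA L4 24GB"),
        ("Medium", 10_000_000, 10_000, 2, "2× NVIDIA L4 24GB"),
        ("Large", float("inf"), 100_000, 4, "4× NVIDIA L4 24GB"),
    ]
    for name, max_docs, max_queries, cpu_nodes, gpu in tiers:
        if documents <= max_docs and queries_per_day <= max_queries:
            return {"tier": name, "cpu_nodes": cpu_nodes, "gpu": gpu}
    raise ValueError("Workload exceeds the largest tier in the table")


# A small corpus with heavy query traffic still needs the Medium tier.
choice = pick_tier(documents=500_000, queries_per_day=5_000)
```

Note that query volume can push a deployment into a larger tier even when the document count is small.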

To estimate the monthly cost of running your own RAG infrastructure, explore:

- Dedicated Server Hosting Pricing Guide

- Cloud Server Hosting Pricing Guide

- Server Colocation Pricing Guide

Deploying Your Private RAG System: Step-by-Step Guide

Deploying your private RAG system means setting up four components on dedicated servers: a vector database, an embedding model, LLM inference, and an orchestration layer.

The steps below walk through each one, with attention to integration, secure data handling, and performance.

Step 1: Deploy Vector Database (Qdrant)

The vector database stores document embeddings and handles semantic search. You deploy it first so that all documents can be indexed and retrieved efficiently.

```bash
docker run -d \
  --name qdrant \
  -p 6333:6333 \
  -v /mnt/nvme/qdrant_storage:/qdrant/storage \
  qdrant/qdrant:latest
```

Create a collection for your documents:

```python
from qdrant_client import QdrantClient
from qdrant_client.models import Distance, VectorParams

client = QdrantClient(url="http://localhost:6333")

# The vector size must match the embedding model's output dimension;
# all-MiniLM-L6-v2 (used in Step 2) produces 384-dimensional vectors.
client.create_collection(
    collection_name="company_docs",
    vectors_config=VectorParams(size=384, distance=Distance.COSINE),
)
```

Index your documents in chunks of 500–1,000 tokens and batch insert 1,000–10,000 at a time. Use HNSW parameters m=16 and ef_construct=100 for fast, scalable search.
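The chunking and batching described above can be sketched without any external dependencies. This is an illustration under assumptions: the 50-token overlap between chunks, the helper names, and the synthetic token list are not from the guide, and a real pipeline would tokenize with the embedding model's own tokenizer.

```python
from typing import Iterator


def chunk_tokens(tokens: list[str], chunk_size: int = 500, overlap: int = 50) -> list[list[str]]:
    """Split a token list into fixed-size chunks that share `overlap` tokens."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    # Skip a trailing chunk whose tokens are already fully covered by the previous one.
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), step)
            if i == 0 or i < len(tokens) - overlap]


def batched(items: list, batch_size: int = 1000) -> Iterator[list]:
    """Yield successive batches for bulk insertion into the vector database."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


tokens = [f"tok{i}" for i in range(1200)]       # stand-in for a tokenized document
chunks = chunk_tokens(tokens)                    # 3 chunks: 500, 500, 300 tokens
batches = list(batched(list(range(2500))))       # batch sizes: 1000, 1000, 500
```

Overlapping chunks reduce the chance that a sentence relevant to a query is split across a chunk boundary, at the cost of a slightly larger index.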

Step 2: Set Up Embedding Model

The embedding model converts text into vectors for semantic search. Deploy it as a service so that user queries and documents are always encoded by the same model.

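```python
from fastapi import FastAPI
from sentence_transformers import SentenceTransformer

app = FastAPI()

# Load the model once at startup so every request reuses it.
model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")


@app.post("/embed")
def embed_text(texts: list[str]):
    # The request body is a JSON array of strings.
    embeddings = model.encode(texts)
    return {"embeddings": embeddings.tolist()}
```

Performance depends on hardware: CPUs produce 50–100 embeddings per second, while a T4 GPU reaches 500–800 per second. Note that all-MiniLM-L6-v2 truncates inputs longer than 256 word pieces by default, so chunks of 500–1,000 tokens call for either smaller chunks or an embedding model with a longer input limit.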
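These throughput figures translate directly into initial indexing time. The short calculation below assumes a hypothetical corpus of one million chunks and uses only the rates quoted above.

```python
def embedding_hours(num_chunks: int, embeddings_per_sec: float) -> float:
    """Hours needed to embed `num_chunks` at a steady rate."""
    return num_chunks / embeddings_per_sec / 3600


chunks = 1_000_000  # hypothetical corpus size
cpu_range = (embedding_hours(chunks, 100), embedding_hours(chunks, 50))
gpu_range = (embedding_hours(chunks, 800), embedding_hours(chunks, 500))

print(f"CPU: {cpu_range[0]:.1f}-{cpu_range[1]:.1f} h")   # CPU: 2.8-5.6 h
print(f"T4:  {gpu_range[0]:.2f}-{gpu_range[1]:.2f} h")   # T4:  0.35-0.56 h
```

For a one-time bulk index, a few hours on CPU may be acceptable. A GPU matters more when documents change often or when query embedding latency is critical.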

Step 3: Deploy LLM Inference (vLLM)

LLM inference generates answers using the retrieved context. Running it on dedicated GPUs keeps response latency low.

Deploy a 7B–8B model:

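```bash
docker run -d \
  --name vllm \
  --gpus all \
  -p 8000:8000 \
  vllm/vllm-openai:latest \
  --model meta-llama/Meta-Llama-3-8B-Instruct \
  --max-model-len 4096 \
  --gpu-memory-utilization 0.9
```

For multi-GPU 70B models:

```bash
# --ipc=host gives the container the shared memory that tensor parallelism needs.
docker run -d \
  --gpus all \
  --ipc=host \
  -p 8000:8000 \
  vllm/vllm-openai:latest \
  --model meta-llama/Llama-2-70b-chat-hf \
  --tensor-parallel-size 4
```

Both models are gated on Hugging Face, so pass an access token to the container (for example through the HUGGING_FACE_HUB_TOKEN environment variable).

Step 4: Build Orchestration Layer (LangChain)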

The orchestration layer connects the vector database, embedding model, and LLM. It handles query processing and retrieval, then passes the retrieved context to the model to generate the final response.

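```python
from qdrant_client import QdrantClient

# Import paths depend on your LangChain release; older versions expose the same
# classes under langchain.embeddings, langchain.vectorstores, and langchain.llms.
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.vectorstores import Qdrant
from langchain_community.llms import VLLMOpenAI
from langchain.chains import RetrievalQA

# Connect components
client = QdrantClient(url="http://localhost:6333")  # same instance as in Step 1

embeddings = HuggingFaceEmbeddings(
    model_name="sentence-transformers/all-MiniLM-L6-v2"
)

vectorstore = Qdrant(
    client=client,
    collection_name="company_docs",
    embeddings=embeddings,
)

llm = VLLMOpenAI(
    openai_api_key="EMPTY",  # placeholder; vLLM only checks keys if started with one
    openai_api_base="http://localhost:8000/v1",
    model_name="meta-llama/Meta-Llama-3-8B-Instruct",
)

# Create RAG chain
qa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    retriever=vectorstore.as_retriever(search_kwargs={"k": 4}),
)

# Query example
response = qa_chain.invoke({"query": "What is our vacation policy?"})
print(response["result"])
```

This setup grounds answers in your own documents while keeping all enterprise data on dedicated servers.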

Securing Private RAG: Compliance, Optimization, and Monitoring

Running a private RAG system requires strong security, regulatory compliance, and performance tuning, all of which are easier to achieve on dedicated servers.

Dedicated configurations give you control over data, network access, and hardware resources while leaving room to optimize for low-latency responses.

Security and Compliance Requirements

The first step is protecting your data and meeting enterprise compliance requirements. The table below shows the essential security measures for private RAG deployments.

| Requirement | Details |
|---|---|
| Network Isolation | ▪️ Private VLAN ▪️ Bastion Host ▪️ Firewall Rules (allowing only necessary ports) |
| Encryption | ▪️ TLS 1.3 for All APIs ▪️ Full-Disk Encryption |
| Access Control | ▪️ SSO (OAuth2/SAML) ▪️ RBAC for Document Collections ▪️ API Key Rotation Every 90 Days |
| Audit Logging | ▪️ Log All Queries ▪️ Retrieved Documents ▪️ LLM Responses |
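As an illustration of the audit logging row, the sketch below builds one JSON line per request that captures the query, the retrieved document IDs, and the LLM response. The field names, the `audit_record` helper, and the sample values are assumptions for the example, not a prescribed schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user_id: str, query: str, doc_ids: list[str], response: str) -> str:
    """Build one JSON line capturing the query, retrieved documents, and response."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "query": query,
        "retrieved_doc_ids": doc_ids,
        "response": response,
        # A digest makes it easy to compare a logged response against another copy.
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
    }
    return json.dumps(record, ensure_ascii=False)


# Hypothetical request, for illustration only.
line = audit_record(
    "u-123", "What is our vacation policy?", ["hr-001", "hr-007"], "20 days per year."
)
```

Writing one self-contained JSON object per line keeps the log easy to ship to a SIEM or to search with standard tools.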

Private RAG deployments also simplify compliance with major standards. Here is how self-hosted infrastructure aligns with GDPR, HIPAA, and SOC 2:

| Standard | How Private RAG Helps |
|---|---|
| GDPR (Article 28) | You remain the data controller, and prompts and documents are never sent to a third-party LLM API acting as a processor |
| HIPAA | PHI stays on servers you control, so no BAA with an LLM API vendor is needed; a BAA with your hosting provider may still apply |
| SOC 2 | Easier to achieve compliance without relying on external cloud vendors or providers |

Performance Optimization Strategies

Retrieval tuning, re-ranking, caching, and inference optimization keep responses fast and relevant.

The table below outlines the key strategies for improving RAG performance and reducing latency.

| Optimization | Approach | Impact |
|---|---|---|
| Retrieval | Hybrid search (vector + BM25), query expansion, and chunk size 512–1024 tokens | Faster and more relevant matches |
| Re-ranking | Cross-encoder model on top 20 results | +15–20% answer quality, with +100 ms latency |
| Caching | Semantic caching with Redis | 70–90% hit rate, and 10–50× speedup on repeated queries |
| Target Latency | End-to-end <2 seconds (embedding 50 ms + search 100 ms + LLM 1500 ms) | Predictable and smooth user experience |
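Hybrid search needs a way to merge the vector ranking with the BM25 ranking. The guide does not name a fusion method, so the sketch below uses reciprocal rank fusion (RRF) as one common option. The constant `k = 60` is the conventional default, and the document IDs are made up for the example.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists; each list contributes 1 / (k + rank) per document."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest combined score first.
    return sorted(scores, key=lambda d: scores[d], reverse=True)


vector_hits = ["doc_a", "doc_b", "doc_c"]   # hypothetical semantic search results
bm25_hits = ["doc_b", "doc_d", "doc_a"]     # hypothetical keyword search results
fused = reciprocal_rank_fusion([vector_hits, bm25_hits])
# fused == ["doc_b", "doc_a", "doc_d", "doc_c"]
```

RRF works on ranks rather than raw scores, which avoids having to normalize cosine similarities against BM25 scores. Documents that appear in both lists rise to the top.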

When these security, compliance, and optimization strategies work together, a private RAG system delivers secure, high-performance operation for enterprise-scale workloads while keeping data under your control.

ServerMania RAG Infrastructure Solutions

ServerMania provides dedicated NVIDIA L4 24GB Tensor Core GPU servers optimized for private RAG deployments and integrated with our other services.

Each package combines CPU, GPU, and memory to handle your document scale and query volume securely and efficiently.

Production-Ready ServerMania RAG Offers:

| CPU | GPU | Cores/Threads | RAM | Storage | Bandwidth |
|---|---|---|---|---|---|
| Dual Intel Xeon Silver 4510 | NVIDIA L4 24GB | 20C/40T | 256 GB | 1 TB NVMe M.2 | 1 Gbps, Unmetered |
| Dual AMD EPYC 7642 | NVIDIA L4 24GB | 96C/192T | 512 GB | 1 TB NVMe M.2 | 1 Gbps, Unmetered |
| Dual AMD EPYC 9634 | NVIDIA L4 24GB | 168C/336T | 512 GB | 960 GB NVMe U.2 | 1 Gbps, Unmetered |

The ServerMania Advantage

ServerMania ensures full data sovereignty. Your information never leaves dedicated infrastructure. Enjoy 24/7/365 expert support, fixed monthly pricing with no surprise API bills, and compliance-ready environments built for HIPAA, GDPR, and SOC 2. With global data centers across the US, Canada, and Europe, you can maintain performance and data residency wherever you operate.

Explore: ServerMania’s Managed Server Solutions

How to Get Started?

- Choose Setup: Explore our dedicated and GPU server solutions.

- Contact Sales: Reach out to ServerMania’s team to discuss your needs.

- Get Guidance: Work with our AI infrastructure specialists to design RAG.

💬 If you’re ready to deploy and configure your RAG Infrastructure, schedule a free consultation with a ServerMania expert or contact our 24/7 customer support directly. Our experts are available right now.

Retrieval-Augmented Generation (RAG) – FAQ

What is private RAG?

Private RAG (Retrieval-Augmented Generation) is a self-hosted AI system that answers your users’ questions using internal documents instead of public data. It combines vector search, foundation models, and prompt engineering to generate context-aware responses from your source data.

How much does private RAG cost?

Private RAG infrastructure costs around $5,000–8,000 per month for enterprise workloads. It offers long-term savings over cloud APIs while keeping all retrieval pipelines and sensitive data on your own servers.

How to deploy private RAG?

You can deploy private RAG in four steps:

- Configure the vector database

- Run an embedding model

- Set up GPU inference

- Build the orchestration layer

This creates a full retrieval-to-generation pipeline using your own semi-structured and structured data.

How does private RAG handle new data?

Private RAG updates its knowledge base through scheduled indexing of new data sources, including PDFs, web pages, and research reports. Each new document is chunked, embedded, and added to your vector database, so it becomes searchable alongside existing content.
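A scheduled indexing job does not need to re-embed everything on each run. One simple approach, sketched below, is to store a content hash per document and only re-index documents whose hash changed. The `plan_reindex` helper, the document IDs, and the use of SHA-256 are assumptions for illustration.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(current_docs: dict[str, str], indexed_hashes: dict[str, str]) -> dict[str, list[str]]:
    """Compare current documents with the hashes stored at the last indexing run."""
    to_upsert = [doc_id for doc_id, text in current_docs.items()
                 if indexed_hashes.get(doc_id) != content_hash(text)]
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return {"upsert": sorted(to_upsert), "delete": sorted(to_delete)}


# Hypothetical state: one unchanged, one edited, one new, and one removed document.
previous = {
    "policy.pdf": content_hash("Vacation: 20 days"),
    "faq.html": content_hash("Old FAQ text"),
    "retired.pdf": content_hash("Obsolete report"),
}
current = {
    "policy.pdf": "Vacation: 20 days",
    "faq.html": "New FAQ text",
    "report.pdf": "Q3 research report",
}
plan = plan_reindex(current, previous)
```

Only the documents listed under `upsert` need to be chunked and embedded again, which keeps scheduled runs short even for large collections.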

How does RAG improve response accuracy?

RAG retrieves context from authoritative data sources before generating an answer. The augmented prompt places that context inside the model’s context window, which improves precision and grounds the output in verified information.

Why does RAG sometimes produce limited results?

RAG produces limited results when the retrieved information lacks semantically relevant passages or related data. This happens when the indexed content is incomplete or the embeddings are low quality, for example when semi-structured data was parsed poorly.

How does Retrieval-Augmented Generation work?

Retrieval-Augmented Generation (RAG) works by combining information retrieval and natural language processing (NLP). It retrieves relevant information from internal or external knowledge sources, then generates context-aware answers using large language models.

How does RAG handle user input?

RAG converts the user input into vector embeddings to find semantically relevant passages. It then combines the retrieved information with the question in an augmented prompt to generate a precise, grounded response. This often works better than keyword search alone, which can miss passages that use different wording.

How does RAG balance broad knowledge and specific context?

RAG blends the broad knowledge of foundation models with targeted context retrieval from your enterprise data. This mix ensures responses are accurate, contextually aligned, and based on both general and domain-specific information.
